Navigating the Viral Database Landscape: A 2024 Guide to Content, Functionality, and FAIR Compliance for Researchers

This article provides a systematic comparison of modern viral databases, essential resources for researchers and drug development professionals. It explores the foundational principles behind these databases, evaluates their methodological applications in areas like antiviral discovery and outbreak surveillance, addresses common challenges such as data errors, and offers a validation framework for selecting the most appropriate tools. By synthesizing the latest reviews and emerging tools, this guide aims to empower scientists to effectively leverage genomic and metadata resources for virology research and public health initiatives.

Understanding the Virus Database Ecosystem: Types, Content, and Core Principles

The Critical Role of Virus Databases in Public Health, Ecology, and Agriculture

Virus databases are indispensable tools in modern scientific research, serving as centralized repositories that store, organize, and facilitate the analysis of viral data. The COVID-19 pandemic has dramatically highlighted their critical importance, revealing how comprehensive data resources enable rapid response to emerging threats through genomic surveillance, outbreak tracking, and therapeutic development [1]. These databases have evolved from simple sequence archives into sophisticated platforms integrating genomic, structural, epidemiological, and clinical data, providing researchers worldwide with the resources needed to tackle viral challenges across human health, ecology, and agricultural systems.

The expanding diversity of virus databases reflects the specialized needs of different research communities. Some focus on particular pathogen taxa (e.g., influenza, hepatitis), while others center on specific data types (e.g., protein structures, immune epitopes) or ecological contexts (e.g., marine viromes, prophages) [2] [3]. This article provides a comparative analysis of major virus databases, examining their content, functionality, and applications to help researchers select appropriate resources for their specific investigations.

Comparative Analysis of Major Virus Databases

Table 1: Overview of Major Virus Databases and Their Core Features

| Database Name | Primary Focus | Key Data Types | Notable Features | Use Cases |
|---|---|---|---|---|
| ViPR | Multiple human pathogenic viruses | Genome/protein sequences, structures, immune epitopes, surveillance data | Integrated analysis tools, family-specific portals | Outbreak investigation, vaccine design, comparative genomics |
| Viro3D | Protein structures | AI-predicted protein structures, structural alignments | >85,000 predicted structures for >4,400 viruses | Evolutionary studies, vaccine design, functional annotation |
| Prophage-DB | Prophages (temperate bacteriophages) | Prophage sequences, host associations, auxiliary metabolic genes | 350,000+ prophages from diverse prokaryotic hosts | Microbial ecology, horizontal gene transfer studies |
| IRD (Influenza Research Database) | Influenza viruses | Genomic sequences, epidemiological data, immune epitopes | Specialized flu-focused tools, surveillance integration | Flu surveillance, strain tracking, vaccine candidate identification |
| Global Initiative on Sharing All Influenza Data (GISAID) | Influenza viruses and SARS-CoV-2 | Sequence data, related clinical and epidemiological data | Access-controlled resource, rapid data sharing during outbreaks | Real-time outbreak response, global surveillance |
| HBVdb | Hepatitis B Virus | Nucleotide and protein sequences, drug resistance profiles | Specialized analysis of genetic variability and drug resistance | Treatment optimization, resistance monitoring |

This comparative analysis shows how database specialization enables targeted research applications. General-purpose resources like ViPR support broad comparative studies across virus families, while specialized databases like Viro3D and Prophage-DB enable deep investigation into specific aspects of virology [4] [5] [2]. ViPR and IRD have since been consolidated, together with PATRIC, into the Bacterial and Viral Bioinformatics Resource Center (BV-BRC). Building analytical tools directly into databases has accelerated research workflows, because scientists can move from data retrieval to analysis without switching platforms.

Experimental Protocols for Database Utilization

Protocol 1: Virome Analysis for Pathogen Detection

High-throughput sequencing (HTS) combined with database resources has revolutionized pathogen detection and discovery. The following protocol is adapted from studies investigating plant viruses in agricultural systems and thrips vectors [6] [7]:

Sample Collection and RNA Extraction: Collect specimens from environmental, clinical, or agricultural sources. For arthropod vectors, pool multiple individuals (≥50) to ensure sufficient genetic material. Extract total RNA using standardized methods (e.g., Trizol protocol).

Library Preparation and Sequencing: Remove ribosomal RNA using depletion kits (e.g., Ribo-Zero Gold). Prepare sequencing libraries using appropriate kits for the platform (e.g., Illumina NovaSeq). Sequence using paired-end approaches (e.g., 150 bp reads) to generate sufficient depth (typically 5-10 GB raw data per sample).

Bioinformatic Processing: Quality control of raw reads using tools like Trimmomatic to remove adapters and low-quality sequences. Map reads to host genome (if available) using Bowtie2 to remove host-derived sequences. Assemble remaining reads de novo using Trinity or similar assemblers.

Viral Identification and Annotation: Compare assembled contigs against virus databases using BLASTX with E-value threshold of 1×10⁻⁵. Identify open reading frames using NCBI ORFfinder. Conduct additional homology searches using HMMER against conserved domain databases (e.g., Pfam, RdRp database).

Validation: Confirm key findings using reverse transcription PCR with specific primers and Sanger sequencing.
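The E-value filter in the identification step is usually applied to BLASTX tabular output. The sketch below assumes BLAST+ was run with `-outfmt 6` using its default 12 columns, for example `blastx -query contigs.fasta -db viral_proteins -evalue 1e-5 -outfmt 6 -out hits.tsv`, where the database name is a placeholder. It keeps the highest-bitscore hit per contig that passes the 1×10⁻⁵ cutoff. The helper name `best_viral_hits` and the example rows are hypothetical.

```python
import csv
import io

# Default column order of BLAST+ tabular output (-outfmt 6).
FIELDS = ["qseqid", "sseqid", "pident", "length", "mismatch", "gapopen",
          "qstart", "qend", "sstart", "send", "evalue", "bitscore"]


def best_viral_hits(handle, max_evalue=1e-5):
    """Return the highest-bitscore hit per contig that passes the E-value cutoff."""
    best = {}
    for row in csv.reader(handle, delimiter="\t"):
        if not row or row[0].startswith("#"):
            continue  # skip blank or comment lines
        hit = dict(zip(FIELDS, row))
        evalue = float(hit["evalue"])
        bitscore = float(hit["bitscore"])
        if evalue > max_evalue:
            continue  # fails the E-value threshold used in the protocol
        current = best.get(hit["qseqid"])
        if current is None or bitscore > current["bitscore"]:
            best[hit["qseqid"]] = {"subject": hit["sseqid"],
                                   "evalue": evalue, "bitscore": bitscore}
    return best


# Hypothetical BLASTX rows: contig_1 has significant hits, contig_2 does not.
example = io.StringIO(
    "contig_1\tvirusA_RdRp\t45.2\t300\t150\t5\t1\t900\t10\t310\t1e-40\t250\n"
    "contig_1\tvirusB_RdRp\t38.0\t280\t160\t6\t1\t840\t5\t285\t1e-30\t200\n"
    "contig_2\tvirusC_CP\t30.1\t50\t35\t2\t1\t150\t1\t50\t0.01\t40\n"
)
hits = best_viral_hits(example)
print(hits)
```

Contigs that keep a hit would then go on to ORF prediction, HMMER domain searches and RT-PCR validation, as described above.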

This HTS-based approach has enabled the discovery of novel viruses in agricultural systems, including mastreviruses in maize and teosinte in North America, and revealed complex mixed infections in crops like grapes and tomatoes [6]. Similar methodologies applied to thrips vectors identified 19 viruses, including previously undocumented species, demonstrating the power of combining HTS with comprehensive database resources [7].

Protocol 2: Structural Virology Using Predictive Databases

The integration of artificial intelligence with virus databases has transformed structural virology. The Viro3D database development exemplifies this approach [5]:

Data Curation: Compile reference protein sequences from authoritative sources (e.g., ICTV Virus Metadata Resource). Process sequences by cleaving large polyproteins into mature peptides based on GenBank annotations.

Structure Prediction: Employ multiple prediction tools: AlphaFold2-ColabFold (MSA-based) and ESMFold (language model-based). Configure computational pipelines for batch processing of thousands of proteins.

Quality Assessment: Evaluate model quality using predicted local-distance difference test (pLDDT) scores. Categorize models as very high (pLDDT > 90), high (90 > pLDDT > 70), low (70 > pLDDT > 50), or very low quality (pLDDT < 50).

Structural Clustering: Perform all-against-all structural comparisons using fold similarity metrics. Cluster proteins with similar structures to identify evolutionary relationships.

Functional Annotation: Integrate structural insights with existing functional annotations. Identify auxiliary metabolic genes and other functionally important regions based on structural features.
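The quality-assessment bands can be applied to per-residue scores in a few lines. This is a minimal sketch with two assumptions. First, the bands as written leave boundary values such as exactly 70 unassigned, so here a boundary value falls into the lower band. Second, the mean over residues is used as the whole-model score. The function names are hypothetical.

```python
def plddt_category(score: float) -> str:
    """Map a pLDDT score (0-100) to the confidence bands in the protocol.

    Assumption: boundary values (90, 70, 50) fall into the lower band.
    """
    if score > 90:
        return "very high"
    if score > 70:
        return "high"
    if score > 50:
        return "low"
    return "very low"


def summarise_model(per_residue_plddt):
    """Return mean pLDDT, its band, and the fraction of residues scoring above 70."""
    if not per_residue_plddt:
        raise ValueError("no pLDDT values supplied")
    n = len(per_residue_plddt)
    mean_score = sum(per_residue_plddt) / n
    above_70 = sum(1 for s in per_residue_plddt if s > 70) / n
    return {"mean_plddt": mean_score,
            "category": plddt_category(mean_score),
            "fraction_above_70": above_70}


scores = [95.0, 88.0, 72.0, 60.0]  # hypothetical per-residue values
print(summarise_model(scores))
```

Reporting the fraction of confident residues alongside the mean helps flag models whose average hides poorly predicted regions.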

This protocol has expanded structural coverage of viral proteins by more than 30 times compared to experimentally determined structures, enabling insights into deep evolutionary relationships, such as the potential origin of coronavirus spike glycoproteins from aquatic herpesviruses [5].

Table 2: Database-Driven Experimental Applications Across Fields

| Application Area | Key Databases | Representative Findings | Impact |
|---|---|---|---|
| Public Health | ViPR, IRD, GISAID | Identification of emerging variants, tracking transmission patterns, epitope prediction for vaccine design | Informed public health responses during outbreaks, accelerated medical countermeasure development |
| Ecology | Prophage-DB, IMG/VR, MTVGD | Discovery of 350,000+ prophages, identification of auxiliary metabolic genes influencing biogeochemical cycles | New understanding of viral roles in microbial ecosystems and global biochemical processes |
| Agriculture | Plant Viruses Online, Virome | Detection of mixed infections in crops, identification of novel mastreviruses, tracking virus transmission by thrips vectors | Improved crop management strategies, development of diagnostic tools, preservation of agricultural productivity |

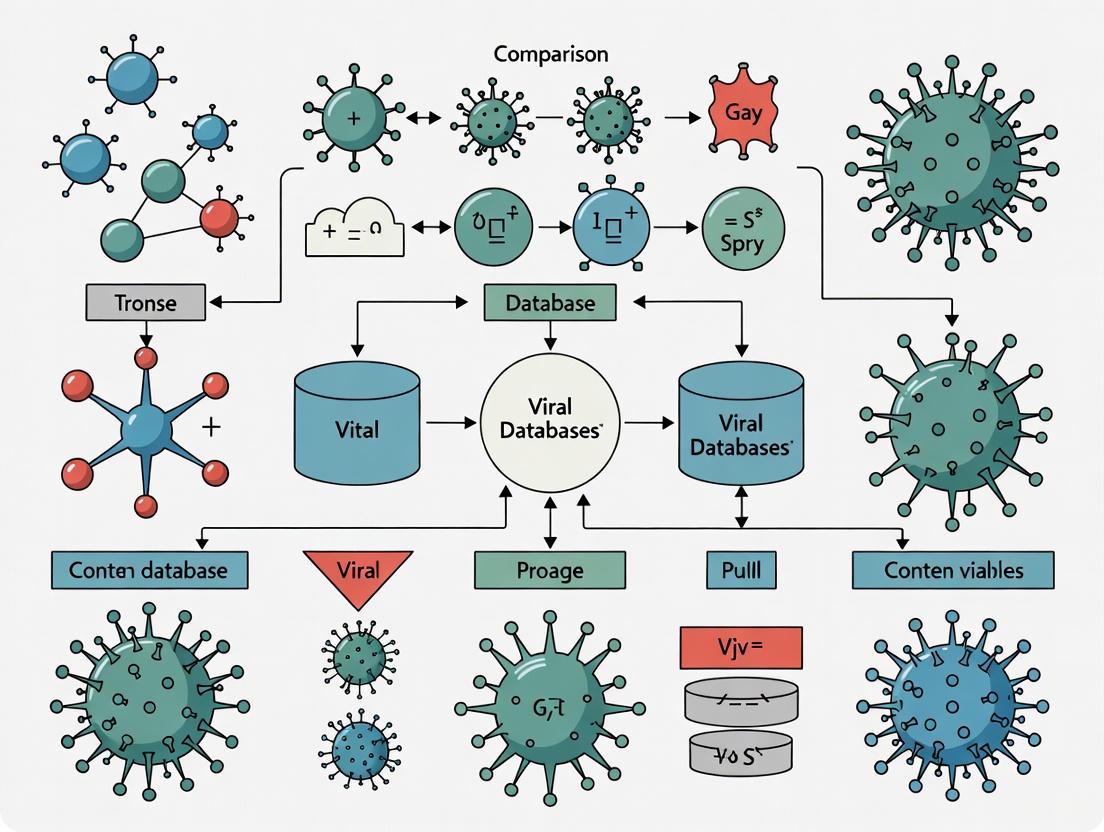

Visualization of Database Utilization Workflows

The workflow illustrates how virus databases serve as the central hub connecting field and laboratory observations with analytical processes and practical applications. Researchers can enter this cycle at multiple points, for example by mining databases to generate hypotheses or by using them to interpret newly generated data.

Table 3: Key Database Resources for Virology Research

| Resource Category | Specific Tools/Databases | Primary Function | Research Application |
|---|---|---|---|
| Comprehensive Databases | ViPR, IRD | Integrated data repository with analysis tools | Comparative genomics, outbreak investigation, vaccine design |
| Sequence Repositories | GenBank, RefSeq, UniProt | Primary sequence data storage and retrieval | Reference-based identification, phylogenetic analysis |
| Specialized Databases | Viro3D, Prophage-DB, HBVdb | Focused data types or pathogen-specific resources | Structural biology, microbial ecology, drug resistance studies |
| Analytical Tools | VIGOR, IDSeq, VirFinder | Genome annotation, pathogen detection, sequence identification | Novel virus discovery, genome annotation, metagenomic analysis |
| Surveillance Platforms | GISAID, FluNet | Global pathogen monitoring and data sharing | Real-time outbreak tracking, epidemiological studies |

This toolkit lets researchers address diverse virological questions through complementary resources. For example, a public health researcher investigating an emerging respiratory virus might begin with GISAID for initial strain comparison and then move to ViPR for detailed genomic analysis. Viro3D could provide structural insights into key proteins, and, if the virus is an influenza virus, IRD could supply relevant immunological data [2].

Virus databases have evolved from simple sequence repositories to sophisticated analytical platforms that are indispensable for addressing complex challenges in public health, ecology, and agriculture. The comparative analysis presented here demonstrates that while general-purpose resources like ViPR and IRD provide broad coverage of human pathogens, specialized databases like Viro3D for protein structures and Prophage-DB for bacteriophages enable deep investigation into specific research questions.

The ongoing development and integration of these resources will be crucial for preparing for future pandemics, understanding ecosystem dynamics, and ensuring food security. As artificial intelligence and machine learning are increasingly integrated into these platforms, we can anticipate more predictive capabilities that will further accelerate discovery and application. Virus databases are essential infrastructure for global health security and for 21st-century virology.

The field of virology has experienced a data deluge, driven by advances in metagenomic sequencing and computational biology. This expansion has necessitated the development of specialized databases tailored to distinct research needs, moving beyond one-size-fits-all repositories. The landscape of virus databases has evolved to include a variety of specialized resources, each optimized for specific data types, analytical functions, and research objectives [4]. This guide provides a systematic comparison of these database specializations, offering researchers a framework for selecting appropriate resources based on content, functionality, and experimental validation.

Database Specialization Categories

Viral databases can be classified into five primary specialization categories based on their core content and analytical strengths: genomic sequence repositories, taxonomic classification systems, protein and functional databases, structural databases, and epidemiological tracking platforms. Each category addresses distinct research needs and employs specialized methodologies.

Table 1: Database Specialization Categories and Representative Examples

| Specialization Category | Primary Function | Representative Databases | Key Strengths |
|---|---|---|---|
| Genomic Sequence Repositories | Store and organize viral genome sequences | IMG/VR, NCBI Viral RefSeq, GVD, GPD | Comprehensive sequence collections, often with metadata on hosts and isolation sources [4] [8] |
| Taxonomic Classification Systems | Classify viruses into taxonomic units based on evolutionary relationships | VITAP, vConTACT2, VICTOR, VIRIDIC | Implementation of ICTV standards, handling of sequence divergence [9] |
| Protein and Functional Databases | Organize and annotate viral protein families and functions | EnVhogDB, Viro3D, pVOGs | Identification of distant homologs, functional annotation [10] [11] |
| Structural Databases | Predict and organize viral protein structures | Viro3D, AFDB, PDB | Structure-based evolutionary insights, conserved functional domains [10] [5] |
| Epidemiological Tracking Platforms | Track virus evolution and spread in near real-time | Nextstrain, NCBI Virus | Public health surveillance, outbreak monitoring, phylogenetic tracking [12] |

Comparative Performance Analysis

Taxonomic Classification Performance

Tools for taxonomic classification show variable performance across viral groups and sequence lengths. Published benchmarks, several of them reported by the tools' own developers, provide useful data for selecting classification pipelines.

Table 2: Performance Comparison of Taxonomic Classification Tools

| Tool | Methodology | Optimal Sequence Length | Reported Accuracy | Key Advantage |
|---|---|---|---|---|
| VITAP | Alignment-based with graph integration | 1,000 bp to full genomes | >0.9 accuracy, precision, and recall for family/genus level | High annotation rates across DNA and RNA viral phyla [9] |
| vConTACT2 | Gene-sharing network analysis | Near-complete genomes | High precision but lower annotation rates | Established standard for prokaryotic virus classification [9] |
| VIRIDIC | Genome-wide nucleic acid similarity | Complete genomes | High agreement with ICTV taxonomy | ICTV-recommended for bacteriophage species delineation [13] |
| Vclust | Alignment-based ANI with Lempel-Ziv parsing | Complete and fragmented genomes | 73-95% agreement with ICTV taxonomy | Superior accuracy and speed for large datasets [13] |

Virus Identification in Metagenomic Data

For identifying viral sequences within complex metagenomic datasets, benchmarking across diverse biomes reveals significant performance variation: across the tools and biomes tested, true positive rates ranged from 0% to 97% and false positive rates from 0% to 30% [14].

Table 3: Performance of Virus Identification Tools Across Biomes

| Tool | Methodology | Performance Notes |
|---|---|---|
| PPR-Meta | Convolutional neural network | Best distinction of viral from microbial contigs [14] |
| DeepVirFinder | CNN using k-mer features | High performance across biomes [14] |
| VirSorter2 | Integration of biological signals in ML framework | Effective for diverse DNA and RNA viruses [14] |
| VIBRANT | Neural network using viral protein domains | Hybrid approach combining homology and ML [14] |
| Sourmash | MinHash-based similarity | Identifies unique viral contigs missed by other tools [14] |
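True and false positive rates are computed from a labelled benchmark set. The true positive rate (TPR) is the fraction of truly viral contigs that a tool flags as viral. The false positive rate (FPR) is the fraction of non-viral contigs that it flags as viral. The sketch below uses the standard definitions with hypothetical contig IDs. Whether [14] computed its rates in exactly this way is an assumption.

```python
def rates(predicted_viral, truly_viral, all_contigs):
    """Compute TPR and FPR for a virus-identification tool.

    predicted_viral: contig IDs the tool labelled viral
    truly_viral: contig IDs known to be viral (ground truth)
    all_contigs: every contig in the benchmark set
    """
    predicted = set(predicted_viral)
    positives = set(truly_viral)
    negatives = set(all_contigs) - positives
    if not positives or not negatives:
        raise ValueError("benchmark needs both viral and non-viral contigs")
    tpr = len(predicted & positives) / len(positives)
    fpr = len(predicted & negatives) / len(negatives)
    return tpr, fpr


contigs = [f"c{i}" for i in range(10)]  # hypothetical benchmark contigs
viral = ["c0", "c1", "c2", "c3"]        # 4 viral, 6 non-viral contigs
calls = ["c0", "c1", "c2", "c7"]        # hypothetical tool output
tpr, fpr = rates(calls, viral, contigs)
print(f"TPR={tpr:.2f}, FPR={fpr:.2f}")
```

Computing both rates per biome for each tool is what produces ranges like those quoted above.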

Experimental Protocols and Methodologies

Taxonomic Classification Workflow

The VITAP pipeline exemplifies a modern approach to viral taxonomy, combining alignment-based techniques with graph-based analysis for comprehensive classification.

Database Construction Protocol:

- Data Retrieval: Automatically downloads from GenBank the genomes listed in the ICTV Virus Metadata Resource Master Species List (VMR-MSL) [9]

- Protein Database Generation: Creates a comprehensive viral reference protein database from retrieved genomes

- Threshold Calculation: Computes taxonomic unit thresholds using protein alignment bitscores to establish classification criteria

Taxonomic Assignment Protocol:

- Protein Alignment: Target genome proteins are aligned against the reference protein database

- Weighted Scoring: Different proteins receive different weights based on taxonomic signal strength

- Path Determination: Cumulative average calculations determine optimal taxonomic hierarchies

- Confidence Assessment: Scores are compared to thresholds to assign low/medium/high confidence levels
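The weighted-scoring and confidence steps can be illustrated with a toy model. This is a hypothetical sketch, not VITAP's implementation. The function name `assign_lineage`, the bitscore-weighted vote, and the 0.8/0.5 support thresholds are all assumptions. VITAP itself derives its thresholds from reference-protein bitscores and determines the path with cumulative averages. The sketch walks down the ranks and, at each rank, lets only hits consistent with the ranks already chosen contribute to the vote.

```python
from collections import defaultdict


def assign_lineage(hits, weights, high=0.8, medium=0.5):
    """Hypothetical weighted-vote taxonomy assignment (not VITAP's algorithm).

    hits: list of (protein_id, lineage, bitscore); lineage is a tuple ordered
          from higher to lower rank, e.g. ("ClassA", "Fam1", "Gen1").
    weights: per-protein weights for taxonomic signal strength (default 1.0).
    Returns a list of (taxon, support_fraction, confidence) per rank.
    """
    assigned = []
    prefix = ()
    depth = 0
    while True:
        scores = defaultdict(float)
        for protein_id, lineage, bitscore in hits:
            # Only hits consistent with the ranks already assigned may vote.
            if len(lineage) > depth and lineage[:depth] == prefix:
                scores[lineage[depth]] += weights.get(protein_id, 1.0) * bitscore
        if not scores:
            break  # no deeper rank is supported by any hit
        total = sum(scores.values())
        taxon, best = max(scores.items(), key=lambda item: item[1])
        support = best / total
        if support >= high:
            confidence = "high"
        elif support >= medium:
            confidence = "medium"
        else:
            confidence = "low"
        assigned.append((taxon, support, confidence))
        prefix += (taxon,)
        depth += 1
    return assigned


# Hypothetical protein alignments for one query genome.
hits = [
    ("p1", ("ClassA", "Fam1", "Gen1"), 300.0),
    ("p2", ("ClassA", "Fam1", "Gen2"), 200.0),
    ("p3", ("ClassA", "Fam2", "Gen3"), 50.0),
]
weights = {"p3": 0.5}  # p3 assumed to carry weaker taxonomic signal
for rank in assign_lineage(hits, weights):
    print(rank)
```

In this example, class and family assignments are strongly supported. At genus level, votes split 300 to 200, which yields only medium confidence.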

Large-Scale Sequence Clustering Methodology

The Vclust approach enables efficient processing of millions of viral sequences through a multi-stage workflow.

Vclust Processing Protocol:

K-mer Based Prefiltering (Kmer-db 2):

- Uses k-mer similarity to rapidly identify related genome pairs

- Implements sparse matrices to handle millions of sequences efficiently

- Applies minimum thresholds to reduce computational burden [13]

Accurate ANI Calculation (LZ-ANI):

- Employs Lempel-Ziv parsing to identify local alignments

- Calculates overall Average Nucleotide Identity (ANI) from aligned regions

- Demonstrates mean absolute error of 0.3% compared to expected values [13]

Efficient Clustering (Clusty):

- Implements six clustering algorithms optimized for sparse distance matrices

- Applies ICTV and MIUViG standards for species demarcation

- Processes millions of genomes within hours on mid-range workstations [13]
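The prefilter-then-cluster design can be sketched in a few lines, with several simplifications. Exact k-mer sets compared by Jaccard similarity stand in for Kmer-db 2. The similarity values passed to the clustering step are made up rather than computed by LZ-ANI. Single-linkage clustering is used as one simple option, not as a statement of Clusty's default. Unlike Vclust's sparse-matrix implementation, the pair loop here is quadratic and only suitable for illustration.

```python
from itertools import combinations


def kmers(seq, k):
    """Set of all k-mers in a sequence (toy stand-in for Kmer-db 2 indexing)."""
    return {seq[i:i + k] for i in range(len(seq) - k + 1)}


def candidate_pairs(genomes, k=5, min_jaccard=0.1):
    """Keep only genome pairs whose k-mer Jaccard similarity passes a cutoff."""
    sets = {name: kmers(seq, k) for name, seq in genomes.items()}
    pairs = []
    for a, b in combinations(sorted(sets), 2):
        union = sets[a] | sets[b]
        jaccard = len(sets[a] & sets[b]) / len(union) if union else 0.0
        if jaccard >= min_jaccard:
            pairs.append((a, b))
    return pairs


def single_linkage(names, similarities, threshold=0.95):
    """Link every pair with similarity >= threshold; return connected components."""
    parent = {n: n for n in names}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for (a, b), sim in similarities.items():
        if sim >= threshold:
            parent[find(a)] = find(b)
    clusters = {}
    for n in names:
        clusters.setdefault(find(n), []).append(n)
    return sorted(sorted(c) for c in clusters.values())


genomes = {  # hypothetical toy genomes
    "g1": "ATGCGTACGTTAGCCGATAGGCTA",
    "g2": "ATGCGTACGTTAGCCGATAGGCTT",  # one substitution relative to g1
    "g3": "TTTTTCCCCCGGGGGAAAAA",      # unrelated sequence
}
pairs = candidate_pairs(genomes)
similarities = {("g1", "g2"): 0.97}  # made-up tANI for the only surviving pair
clusters = single_linkage(list(genomes), similarities, threshold=0.95)
print(pairs, clusters)
```

Only pairs that survive the cheap prefilter reach the expensive ANI step. That is why the prefilter dominates scalability.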

Structural Prediction and Analysis Protocol

Viro3D exemplifies the application of machine learning for structural prediction at proteome scale.

Structural Prediction Protocol:

Data Curation:

- Collects 4,407 virus isolates from ICTV VMR focusing on human and animal viruses

- Processes 85,162 protein records, excluding large polyproteins in favor of mature peptides [5]

Parallel Structure Prediction:

- Applies AlphaFold2-ColabFold (MSA-dependent) and ESMFold (language model-based)

- Achieves 93.1% residue coverage with ColabFold, 92.3% with ESMFold

- Expands structural coverage 30-fold compared to experimental structures [5]

Quality Assessment and Analysis:

- Evaluates model quality using pLDDT scores

- Performs structural clustering to identify distant homologs

- Enables functional annotation and evolutionary analysis [10]
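AlphaFold2 and ColabFold write each residue's pLDDT into the B-factor column of their PDB output. Per-model confidence can therefore be recovered with fixed-width parsing of CA atom records. The sketch below reads that column using the standard PDB column positions. The `atom_line` helper is hypothetical and only builds test records. For real files, a full parser such as Biopython's `Bio.PDB.PDBParser` would be more robust.

```python
def plddt_from_pdb(pdb_text):
    """Per-residue pLDDT read from the B-factor field of CA atoms in PDB text."""
    scores = {}
    for line in pdb_text.splitlines():
        # Columns 13-16 hold the atom name; one CA atom per residue.
        if line.startswith("ATOM") and line[12:16].strip() == "CA":
            chain = line[21]                          # column 22
            resseq = int(line[22:26])                 # columns 23-26
            scores[(chain, resseq)] = float(line[60:66])  # B-factor, cols 61-66
    return scores


def atom_line(serial, name4, resseq, plddt, resname="ALA", chain="A"):
    """Build a fixed-width PDB ATOM record (test data only; coordinates zeroed)."""
    return (f"ATOM  {serial:5d} {name4} {resname:>3} {chain}{resseq:4d}    "
            f"{0.0:8.3f}{0.0:8.3f}{0.0:8.3f}{1.0:6.2f}{plddt:6.2f}")


pdb = "\n".join([
    atom_line(1, " N  ", 1, 91.0),   # backbone N is ignored
    atom_line(2, " CA ", 1, 91.0),
    atom_line(3, " CA ", 2, 65.5),
    "END",
])
scores = plddt_from_pdb(pdb)
mean_plddt = sum(scores.values()) / len(scores)
print(scores, mean_plddt)
```

The per-residue values can then be summarized or banded as in the quality-assessment step.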

The Scientist's Toolkit: Essential Research Tools

Table 4: Key Bioinformatics Tools and Databases for Viral Research

| Tool/Database | Primary Function | Research Application | Specialization |
|---|---|---|---|
| CheckV | Assess viral genome quality | Completeness estimation and contamination identification | Genome Quality Control [8] |
| vConTACT2 | Protein cluster-based taxonomy | Network-based classification of viral sequences | Taxonomic Classification [8] [9] |
| VirSorter2 | Viral sequence identification | Detection of diverse DNA and RNA viruses in metagenomes | Virus Discovery [14] [11] |
| DeepVirFinder | Machine learning-based detection | Identification of viral sequences using k-mer patterns | Metagenomic Analysis [14] [8] |
| AlphaFold2-ColabFold | Protein structure prediction | Generation of high-confidence structural models | Structural Biology [10] [5] |
| EnVhogDB HMM profiles | Homology detection | Functional annotation of viral proteins | Protein Family Analysis [11] |
| Nextstrain Auspice | Phylogenetic visualization | Real-time tracking of virus evolution and spread | Epidemiological Surveillance [12] |

The specialization of viral databases represents a maturation of the field, moving from general-purpose repositories to purpose-built resources optimized for specific research applications. Genomic databases like IMG/VR provide comprehensive sequence collections, taxonomic systems like VITAP offer accurate classification, structural resources like Viro3D enable structure-function insights, and epidemiological platforms like Nextstrain support public health surveillance. This taxonomic framework provides researchers with a systematic approach for selecting appropriate databases based on their specific research objectives, experimental needs, and analytical requirements. As viral sequence data continues to expand exponentially, these specialized resources will play an increasingly critical role in translating raw data into biological insights, ultimately supporting drug development, vaccine design, and outbreak response.

The explosion of viral genomic data from metagenomics and viromics has dramatically expanded our understanding of viral diversity and evolution. Next-generation sequencing technologies now produce millions of viral genomes and fragments annually, creating unprecedented challenges for storage, annotation, comparison, and analysis [13]. The global COVID-19 pandemic further highlighted the critical importance of reliable viral data sharing platforms, as evidenced by controversies surrounding databases like GISAID, which faced criticism for its opaque governance and unexpected restrictions on data access [15]. These developments have accelerated innovation in both database architectures and the analytical tools that process viral genomic information.

Viral genomic databases serve as essential infrastructure for modern pathogen research, enabling everything from real-time variant tracking during pandemics to the computational design of antiviral drugs and vaccines [15] [16]. The functional utility of these databases depends on three core components: the genomic sequences themselves, the annotation metadata describing gene structures and functional elements, and the vital metadata encompassing source information, sampling data, and taxonomic classifications. This guide examines the leading databases and annotation tools, comparing their performance, accuracy, and suitability for different research applications in viral genomics.

Database Platforms and Annotation Tools Comparison

Major Viral Sequence Database Platforms

The landscape of viral genomic databases includes both general-purpose nucleotide repositories and specialized platforms optimized for particular research communities. The International Nucleotide Sequence Database Collaboration (INSDC), comprising NCBI, ENA, and DDBJ, is the most comprehensive resource, with efficient data sharing between its nodes. However, it has been characterized as having a near-total lack of governance, and features such as anonymous downloads may limit accountability [15]. In contrast, GISAID emerged as a specialized platform, initially for influenza data, that expanded rapidly during the COVID-19 pandemic, although its restrictions on data access and reuse have generated controversy [15].

Emerging alternatives like Pathoplexus offer open-source, scientist-led approaches with governance based on the LISTEN principles, which ensure open but traceable access to digital sequence information (DSI) [15]. For structural virology, Viro3D has recently emerged as the most comprehensive human virus protein database, containing high-quality structural models for 85,000 proteins from 4,400 human and animal viruses using AI-powered predictions [16]. This expands current structural knowledge by 30 times and has already revealed previously unknown information, such as the genetic ancestry of SARS-CoV-2 proteins potentially originating from ancestral herpesviruses [16].

Table 1: Comparison of Major Viral Genomic Database Platforms

| Database Platform | Primary Focus | Governance Model | Key Features | Limitations |
|---|---|---|---|---|
| INSDC (NCBI, ENA, DDBJ) | Comprehensive nucleotide data | Multinational collaboration | Efficient data sharing between nodes; largest volume | Limited governance; anonymous downloads [15] |
| GISAID | Influenza & pandemic viruses | Independent nongovernmental | Promotes equitable collaboration; rapid deposition | Opaque governance; access restrictions [15] |
| Pathoplexus | General pathogen DSI | Open-source, scientist-led | LISTEN principles for traceable access; uses Loculus software | Limited funding; participation in PABS system not guaranteed [15] |
| Viro3D | Viral protein structures | Academic (MRC-University of Glasgow) | AI-predicted structures; 85,000 proteins from 4,400 viruses | New platform; established in 2025 [16] |
| IMG/VR | Viral metagenomes | Academic (DOE Joint Genome Institute) | 15+ million virus contigs; ecosystem context | Specialized focus [13] |

Performance Comparison of Viral Genome Clustering Tools

As viral datasets expand exponentially, efficient clustering tools have become essential for taxonomic classification and duplicate removal. Vclust, introduced in 2025, represents a significant advancement with its Lempel-Ziv parsing-based algorithm (LZ-ANI) that identifies local alignments and calculates overall Average Nucleotide Identity (ANI) from aligned regions [13]. When benchmarked against established tools, Vclust demonstrated superior accuracy and efficiency, clustering millions of genomes in hours rather than days on mid-range workstations.

In comprehensive evaluations using 10,000 pairs of phage genomes containing simulated mutations, Vclust achieved a mean absolute error (MAE) of just 0.3% for total ANI (tANI) estimation, outperforming VIRIDIC (0.7% MAE), FastANI (6.8% MAE), and skani (21.2% MAE) [13]. For bacteriophage species groupings (tANI ≥ 95%), Vclust showed 73% agreement with official International Committee on Taxonomy of Viruses (ICTV) taxonomy, compared to 69% for VIRIDIC, 40% for FastANI, and 27% for skani [13]. After excluding inconsistencies in ICTV taxonomic proposals, Vclust's agreement improved to 95%, surpassing VIRIDIC (90%) and other tools [13].

Perhaps most impressively, Vclust processed the entire IMG/VR database of 15,677,623 virus contigs while performing sequence identity estimations for approximately 123 trillion contig pairs and alignments for ~800 million pairs, resulting in 5-8 million virus operational taxonomic units (vOTUs) [13]. This massive computation was completed >115× faster than MegaBLAST, >6× faster than skani or FastANI, and approximately 1.5× faster than MMseqs2 [13].
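The "approximately 123 trillion" figure is the number of unordered pairs among 15,677,623 contigs, n(n - 1)/2. The same arithmetic shows why prefiltering matters: only about 800 million of those pairs went on to alignment. The variable names below are illustrative. The inputs are the figures quoted in the text.

```python
n_contigs = 15_677_623          # IMG/VR contigs processed by Vclust
all_pairs = n_contigs * (n_contigs - 1) // 2   # unordered pairs, n(n-1)/2
aligned_pairs = 800_000_000     # ~800 million pairs aligned, per the text

print(f"{all_pairs:,} pairs (~{all_pairs / 1e12:.0f} trillion)")
print(f"fraction of pairs aligned: {aligned_pairs / all_pairs:.2e}")
```

Roughly 6 to 7 pairs in every million reached the alignment stage. The remaining pairs were discarded by the k-mer prefilter.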

Table 2: Performance Metrics of Viral Genome Clustering Tools

| Tool | tANI MAE | Species Agreement with ICTV | Processing Speed | Key Algorithm |
|---|---|---|---|---|
| Vclust | 0.3% | 73% (95% after curation) | 115× faster than MegaBLAST | LZ-ANI alignment [13] |
| VIRIDIC | 0.7% | 69% (90% after curation) | Reference baseline | Alignment-based [13] |
| FastANI | 6.8% | 40% | 6× slower than Vclust | k-mer sketching [13] |
| skani | 21.2% | 27% | 6× slower than Vclust | Sparse approximate alignment [13] |
| MegaBLAST+anicalc | ~1.0% | Not reported | 115× slower than Vclust | BLAST-based alignment [13] |

Accuracy and Speed of Variant Annotation Tools

Variant annotation is another potential bottleneck in genomics pipelines, particularly with the expansion of large-scale population studies. The benchmarks below were performed on human clinical and population variants rather than on viral genomes. A 2022 performance evaluation compared three variant annotation tools, Alamut Batch, Ensembl Variant Effect Predictor (VEP), and ANNOVAR, against a manually curated ground-truth set of 298 variants from a clinical laboratory [17]. VEP produced the most accurate annotations, correctly calling 297 of the 298 variants (99.7% accuracy). Alamut Batch correctly called 296 variants, and ANNOVAR showed the most discrepancies, with only 93.3% concordance with the ground-truth set [17]. The study attributed VEP's performance to its use of updated gene transcript versions [17].

More recent developments include Illumina Connected Annotations, which was selected by major population studies including the All of Us Research Program and UK Biobank due to its exceptional performance at scale [18]. In accuracy benchmarks against nearly 9 million variants from whole-genome and whole-exome sequencing, Illumina Connected Annotations achieved 100% accuracy for HGVS genomic notation, 99.997% for coding notation, and 99.998% for protein notation, matching or slightly outperforming VEP across categories [18].

For processing speed, Illumina Connected Annotations annotated a whole-genome germline VCF containing approximately 6.5 million variants in less time than comparable tools when run on identical cloud hardware (AWS EC2 c5.4xlarge) [18]. The UK Biobank annotated the multi-sample variant call files for its entire 500,000-genome dataset in approximately 90 minutes using this tool [18].

Table 3: Performance Comparison of Variant Annotation Tools

| Annotation Tool | HGVS Genomic Accuracy | HGVS Coding Accuracy | HGVS Protein Accuracy | Processing Speed (6.5M variants) |
|---|---|---|---|---|
| Illumina Connected Annotations | 100% | 99.997% | 99.998% | Fastest (benchmark) [18] |
| VEP | 99.970% | 99.991% | 99.998% | ~2× slower than Illumina [18] |
| SnpEff | Not reported | 99.962% | 99.988% | ~3× slower than Illumina [18] |
| ANNOVAR | Not reported | 99.981% | 99.988% | ~4× slower than Illumina [18] |
| Alamut Batch | Not reported | ~99.3%* | ~99.3%* | Not reported [17] |

*Estimated from Alamut Batch's overall accuracy (296 of 298 variants) in a separate benchmark [17], not from category-specific HGVS testing.

Experimental Protocols and Methodologies

Benchmarking Viral Genome Clustering Tools

The evaluation methodology for viral genome clustering tools follows rigorous benchmarking protocols established in recent literature [13]. The standard experiment involves calculating average nucleotide identity (ANI) measures for complete and fragmented viral genomes followed by clustering according to thresholds endorsed by the International Committee on Taxonomy of Viruses (ICTV) and Minimum Information about an Uncultivated Virus Genome (MIUViG) standards [13].

Experimental Protocol:

- Dataset Preparation: Curate a diverse set of viral genomes, typically including both reference sequences and metagenomic contigs. The IMG/VR database with 15,677,623 virus contigs represents an appropriate test set for large-scale evaluations [13].

- Mutation Simulation: Generate 10,000 pairs of phage genomes containing simulated mutations including substitutions, deletions, insertions, inversions, duplications, and translocations to establish ground truth ANI values [13].

- Tool Execution: Run each clustering tool (Vclust, VIRIDIC, FastANI, skani) with its default parameters or with the settings recommended for viral genome comparison.

- Accuracy Assessment: Compare tool-generated tANI values against expected values to calculate mean absolute error (MAE). Vclust achieved an MAE of 0.3% in recent benchmarks [13].

- Taxonomic Agreement: Evaluate species groupings (tANI ≥ 95%) and genus groupings (tANI ≥ 70%) against official ICTV taxonomy to measure biological relevance [13].

- Scalability Testing: Process the entire dataset and measure runtime, memory usage, and clustering quality on standard mid-range workstations [13].
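The accuracy step reduces to a simple calculation. The Python sketch below computes the MAE between expected (simulated ground-truth) ANI values and tool-reported ANI values for a handful of genome pairs. The numbers are invented for illustration and are not taken from the cited benchmark.

```python
from statistics import mean


def mean_absolute_error(expected, observed):
    """Mean absolute difference between expected and tool-reported ANI values (in %)."""
    if len(expected) != len(observed) or not expected:
        raise ValueError("expected and observed must be non-empty and the same length")
    return mean(abs(e - o) for e, o in zip(expected, observed))


# Hypothetical ground-truth vs. tool-reported tANI values (%) for four simulated pairs
expected_tani = [99.0, 95.5, 88.0, 72.0]
tool_tani = [98.7, 95.9, 87.6, 72.3]
print(f"MAE = {mean_absolute_error(expected_tani, tool_tani):.2f} percentage points")
```

Because the values are percentages, the MAE is expressed in percentage points. This is the same unit in which an MAE of 0.3% is reported above.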

Validation Metrics:

- Total ANI (tANI): Overall nucleotide identity across the entire genome

- Aligned Fraction (AF): Percentage of genome aligned in pairwise comparisons

- Mean Absolute Error (MAE): Difference between calculated and expected ANI values

- Taxonomic Agreement: Consistency with ICTV classifications at species and genus levels
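The sketch below derives ANI, AF, and tANI from per-direction alignment summaries, which shows how the three metrics relate. It assumes a VIRIDIC-style definition of tANI: identical bases found in both directions, divided by the combined length of both genomes. The exact formula implemented in Vclust may differ in detail, and the genome lengths and counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DirectionalAlignment:
    """Summary of all local alignments of a query genome against a reference genome."""
    query_length: int     # length of the query genome (nt)
    aligned_bases: int    # query bases covered by local alignments
    identical_bases: int  # identical nucleotides within those alignments


def ani(aln: DirectionalAlignment) -> float:
    """ANI over aligned regions only (one direction)."""
    return aln.identical_bases / aln.aligned_bases if aln.aligned_bases else 0.0


def aligned_fraction(aln: DirectionalAlignment) -> float:
    """Fraction of the query genome covered by alignments (AF)."""
    return aln.aligned_bases / aln.query_length


def total_ani(a_vs_b: DirectionalAlignment, b_vs_a: DirectionalAlignment) -> float:
    """Assumed VIRIDIC-style tANI: identical bases in both directions over both genome lengths."""
    return (a_vs_b.identical_bases + b_vs_a.identical_bases) / (
        a_vs_b.query_length + b_vs_a.query_length
    )


# Hypothetical pair: genome A is 40 kb, genome B is 38 kb
a_vs_b = DirectionalAlignment(query_length=40_000, aligned_bases=36_000, identical_bases=34_920)
b_vs_a = DirectionalAlignment(query_length=38_000, aligned_bases=36_000, identical_bases=34_920)
print(f"ANI(A->B) = {ani(a_vs_b):.3f}, AF(A) = {aligned_fraction(a_vs_b):.3f}")
print(f"tANI = {total_ani(a_vs_b, b_vs_a):.3f}")
```

In this example the aligned regions are 97% identical. However, tANI is only about 89.5% because part of each genome is unaligned, so the pair would fall below the 95% species threshold. This is why tANI, rather than ANI over aligned regions alone, is used for species-level grouping.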

Evaluating Variant Annotation Accuracy

The accuracy of variant annotation tools is typically assessed using manually curated ground-truth datasets with variants independently verified through orthogonal methods [17] [18]. The experimental protocol focuses on conformity with Human Genome Variation Society (HGVS) nomenclature standards across genomic, coding, and protein sequence annotations.

Experimental Protocol:

- Ground-Truth Curation: Compile a set of 298+ variants previously classified and reviewed in clinical reports, with orthogonal confirmation using Sanger sequencing when necessary [17]. Include both intronic and exonic variants across hundreds of genes.

- Tool Configuration: Run each annotation tool (VEP, Alamut Batch, ANNOVAR, Illumina Connected Annotations) with consistent database versions (e.g., RefSeq GCF_000001405.40, Ensembl release 110) [18].

- HGVS Nomenclature Validation: Use validation tools like Mutalyzer (v2.0.35) to verify outputted data against standard HGVS nomenclature guidelines [17].

- Accuracy Calculation: Compare tool-generated annotations against ground-truth classifications across three categories: genomic notation, coding notation ("cNomen"), and protein notation ("pNomen") [18].

- Performance Benchmarking: Execute all tools on identical hardware (e.g., AWS EC2 c5.4xlarge) with the same input VCF files containing 6-7 million variants to measure processing speed [18].
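The accuracy calculation can be sketched as exact-match concordance per notation category. The Python below is illustrative only. The variant keys and the shortened HGVS strings (reference-sequence prefixes omitted) are hypothetical. A real evaluation would normalize descriptions before comparing strings, for example with Mutalyzer as described above.

```python
from collections import Counter

CATEGORIES = ("genomic", "cNomen", "pNomen")


def concordance(truth, predicted):
    """Per-category exact-match rate of tool annotations against ground truth.

    Both arguments map a variant key to {category: HGVS description}. A category absent
    from the ground truth (e.g. no protein change for an intronic variant) is skipped;
    a variant missing from `predicted` counts as discordant.
    """
    matches, totals = Counter(), Counter()
    for variant, expected in truth.items():
        observed = predicted.get(variant, {})
        for cat in CATEGORIES:
            if cat not in expected:
                continue
            totals[cat] += 1
            matches[cat] += int(observed.get(cat) == expected[cat])
    return {cat: matches[cat] / totals[cat] for cat in CATEGORIES if totals[cat]}


# Hypothetical ground truth and tool output (one intronic variant without pNomen)
truth = {
    "var1": {"genomic": "g.1000A>G", "cNomen": "c.100A>G", "pNomen": "p.(Lys34Glu)"},
    "var2": {"genomic": "g.2050del", "cNomen": "c.250+5del"},
}
tool = {
    "var1": {"genomic": "g.1000A>G", "cNomen": "c.100A>G", "pNomen": "p.(Lys34Glu)"},
    "var2": {"genomic": "g.2050del", "cNomen": "c.250+4del"},
}
print(concordance(truth, tool))
```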

Quality Control Measures:

- Manual review of variants using visualization tools like Alamut Visual (v2.15) and Integrative Genomics Viewer (IGV v2.10.2) [17]

- Right-alignment (3' shifting) of variants in repetitive sequence relative to the coding and protein sequences, in accordance with the HGVS 3' rule [18]

- Canonical transcript identification using MANE or established heuristic methods [18]
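Right-alignment matters most for indels in repetitive sequence, where the same deletion can be written at several positions and HGVS requires the most 3' one. The minimal sketch below shifts a deletion 3' along a plain reference string. It assumes the string is already in transcript orientation; for a minus-strand transcript, that means the reverse complement of the genomic sequence. The sequence is a toy example.

```python
def right_align_deletion(ref, start, length):
    """Shift a deletion of ref[start:start+length] as far 3' as possible.

    Coordinates are 0-based and half-open. Each shift keeps the resulting sequence
    identical because the base leaving the 5' end equals the base entering at the 3' end.
    """
    while start + length < len(ref) and ref[start] == ref[start + length]:
        start += 1
    return start, start + length


def apply_deletion(ref, start, end):
    """Return the sequence with ref[start:end] removed."""
    return ref[:start] + ref[end:]


ref = "GCATATATG"
# One "AT" repeat unit deleted, initially described at its leftmost position [2, 4)
new_start, new_end = right_align_deletion(ref, 2, 2)
print(new_start, new_end)
```

Here the deleted AT unit moves from the 0-based, half-open interval [2, 4) to [6, 8), which corresponds to 1-based positions 7_8. The resulting sequence is the same either way.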

Visualization of Database Workflows and Relationships

Viral Genome Clustering with Vclust

The Vclust workflow integrates three specialized components that enable ultrafast and accurate clustering of viral genomes at scale, applied as sequential processing stages:

Vclust Computational Workflow

The Vclust workflow begins with Kmer-db 2, which performs initial k-mer-based estimation of sequence identity across all genome pairs using proportional k-mer sampling rather than fixed-sized sketching [13]. This preserves relationships between sequence lengths and enables processing of tens of millions of sequences through sparse matrix implementation. The resulting candidate pairs proceed to LZ-ANI, which employs Lempel-Ziv parsing to identify local alignments and calculate both ANI and aligned fraction (AF) measures with high sensitivity [13]. Finally, Clusty implements six clustering algorithms optimized for sparse distance matrices containing millions of genomes, producing virus operational taxonomic units (vOTUs) compliant with ICTV and MIUViG standards [13].
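The final clustering stage can be illustrated with the simplest algorithm family: single linkage over a sparse list of pairwise scores, implemented with union-find. This is a sketch, not Clusty's code. It assumes the commonly cited MIUViG vOTU thresholds of 95% ANI over 85% aligned fraction, and the genome identifiers and scores are hypothetical. Pairs that never pass the k-mer prefilter are simply absent from the list, which keeps the approach sparse.

```python
def cluster_votus(genomes, pairs, min_ani=0.95, min_af=0.85):
    """Single-linkage (connected-component) clustering of genomes into vOTUs.

    pairs: iterable of (genome_a, genome_b, ani, af). Pairs not listed are treated
    as unrelated, mirroring a sparse distance matrix.
    """
    parent = {g: g for g in genomes}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    for a, b, ani, af in pairs:
        if ani >= min_ani and af >= min_af:
            root_a, root_b = find(a), find(b)
            if root_a != root_b:
                parent[root_b] = root_a  # merge the two clusters

    clusters = {}
    for g in genomes:
        clusters.setdefault(find(g), []).append(g)
    return sorted(sorted(members) for members in clusters.values())


genomes = ["g1", "g2", "g3", "g4", "g5"]
pairs = [
    ("g1", "g2", 0.97, 0.92),
    ("g2", "g3", 0.96, 0.88),
    ("g3", "g4", 0.96, 0.40),  # high identity but low coverage: not linked
    ("g4", "g5", 0.80, 0.95),  # high coverage but below species-level ANI: not linked
]
votus = cluster_votus(genomes, pairs)
print(votus)
```

Genome g4 stays a singleton despite 96% ANI with g3, because only 40% of it aligns. Single linkage also places g1 and g3 in the same cluster through g2, even though they were never compared directly.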

Variant Annotation Data Flow

The functional annotation of genomic variants follows a structured pipeline that transforms raw variant calls into biologically meaningful annotations through three key processing stages:

Variant Annotation Pipeline

The annotation pipeline begins with Transcript Intersection, where variants are mapped to overlapping transcripts using interval arrays, with adjustments for discrepancies between transcript and genomic reference sequences [18]. The Consequence Prediction stage then marks overlapping exons and introns, generates HGVS-compliant coding and protein nomenclature ("cNomen" and "pNomen"), and provides sequence ontology consequences for each variant [18]. Finally, Functional Annotation integrates information from external databases including population frequencies (gnomAD), clinical variants (ClinVar), predictive scores (SpliceAI, PrimateAI-3D), and gene information (OMIM, ClinGen) to produce comprehensively annotated variants ready for interpretation [18].
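The transcript-intersection step amounts to an interval-overlap query. The sketch below uses a sorted array of transcript start positions plus binary search. It is one simple way to realize "interval arrays" and is not claimed to match the data structure used by Illumina Connected Annotations. Transcript names and coordinates are hypothetical, and a real index would be built per chromosome.

```python
from bisect import bisect_left, bisect_right
from typing import NamedTuple


class Transcript(NamedTuple):
    name: str
    start: int  # 1-based, inclusive
    end: int    # 1-based, inclusive


class TranscriptIndex:
    """Sorted-array index for finding transcripts that overlap a variant on one chromosome."""

    def __init__(self, transcripts):
        self.transcripts = sorted(transcripts, key=lambda t: t.start)
        self.starts = [t.start for t in self.transcripts]
        # The longest transcript bounds how far upstream an overlapping transcript can start
        self.max_len = max((t.end - t.start + 1 for t in self.transcripts), default=0)

    def overlapping(self, var_start, var_end):
        """Names of transcripts with start <= var_end and end >= var_start."""
        lo = bisect_left(self.starts, var_start - self.max_len + 1)
        hi = bisect_right(self.starts, var_end)
        return [t.name for t in self.transcripts[lo:hi] if t.end >= var_start]


index = TranscriptIndex([
    Transcript("TX_A", 100, 500),
    Transcript("TX_B", 450, 900),
    Transcript("TX_C", 1000, 1200),
])
print(index.overlapping(480, 480))
print(index.overlapping(950, 960))
```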

Research Reagent Solutions

Table 4: Essential Research Reagents and Computational Tools for Viral Genomics

| Resource | Type | Primary Function | Application Context |
|---|---|---|---|
| Vclust | Software Tool | Viral genome clustering & ANI calculation | Taxonomic classification of metagenomic viruses [13] |
| Illumina Connected Annotations | Software Tool | Variant annotation with HGVS nomenclature | Population-scale variant interpretation [18] |
| VEP (Variant Effect Predictor) | Software Tool | Open-source variant annotation | Clinical variant analysis & annotation [17] |
| Alamut Batch | Software Tool | Commercial variant annotation | Clinical laboratories requiring HGVS compliance [17] |
| IMG/VR Database | Data Resource | Curated viral metagenomes | Reference database for viral sequence comparison [13] |
| Viro3D | Data Resource | AI-predicted viral protein structures | Structural virology & vaccine design [16] |
| GISAID | Data Resource | Pandemic virus sequences | Real-time pathogen tracking & surveillance [15] |
| INSDC | Data Resource | Comprehensive nucleotide data | General-purpose genomic research [15] |
| RefSeq & Ensembl | Data Resource | Reference transcripts & gene models | Variant annotation & consequence prediction [18] |
| HGVS Standards | Specification | Nomenclature guidelines | Standardized variant description [17] |

The evolving landscape of viral genomic databases and annotation tools reflects the field's rapid response to both technological opportunities and practical challenges encountered during recent public health emergencies. Performance benchmarks clearly demonstrate that modern tools like Vclust for genome clustering and Illumina Connected Annotations for variant annotation provide significant advantages in accuracy, speed, and scalability compared to earlier solutions [13] [18]. These advancements come at a critical time when viromics studies are generating data at an unprecedented scale, with tools now capable of processing millions of genomes and billions of variants within practical timeframes [13] [18].

The development of specialized resources like Viro3D for structural predictions and emerging platforms like Pathoplexus for open data sharing indicates a maturation of the database ecosystem, addressing specific research needs beyond simple sequence storage [16] [15]. However, challenges remain in governance models and data access policies, as highlighted by controversies surrounding established platforms like GISAID [15]. As viral genomics continues to evolve, the integration of AI-powered structural predictions [16], increasingly efficient clustering algorithms [13], and population-scale annotation pipelines [18] will collectively enhance our ability to respond to emerging viral threats and accelerate the development of targeted antiviral therapies.

This guide provides an objective comparison of how major viral genomic databases adhere to the FAIR Principles (Findable, Accessible, Interoperable, and Reusable). The evaluation focuses on the Reference Viral Database (RVDB) alongside other common resources, utilizing a framework of quantitative metrics and experimental data relevant to researchers conducting viral detection and discovery in biologics and drug development. The 2025 refinement of RVDB demonstrates significant advancements in computational efficiency and detection accuracy, offering a contemporary benchmark for FAIR compliance in specialized genomic databases [19].

The FAIR Guiding Principles establish a framework for enhancing the utility of digital assets by emphasizing machine-actionability, a critical requirement for handling the volume and complexity of modern viral sequence data [20] [21]. For researchers and drug development professionals, FAIR compliance ensures that viral databases are not merely repositories but active resources that integrate seamlessly into high-throughput sequencing (HTS) bioinformatics pipelines. The core challenge in the field is balancing comprehensive data collection with computational efficiency and accurate annotation to avoid false positives/negatives in adventitious virus detection [19]. This evaluation uses the FAIR principles as a consistent lens to compare database performance and functionality.

Quantitative Comparison of Viral Databases

The following tables summarize a comparative analysis of key viral databases based on standardized FAIR metrics. The assessment of RVDB incorporates recent performance data from its 2025 refinement [19].

Table 1: General Database Characteristics and FAIR Alignment

| Database Feature | Reference Viral Database (RVDB) | NCBI RefSeq Viral | GenBank (nr/nt) |
|---|---|---|---|
| Primary Scope | Comprehensive viral, viral-like, and viral-related sequences; phages excluded [19] | Curated, full-length viral genomes [19] | All public sequences, including partial genomes and cellular sequences [19] |
| Redundancy | Clustered (98% similarity) and Unclustered versions available [19] | Low redundancy | High redundancy |
| Cellular Sequence Content | Actively reduced [19] | Not applicable (viral only) | High (can obscure viral detection) [19] |
| Phage Sequences | Excluded to reduce false positives from vectors/adapters [19] | Included | Included |
| SARS-CoV-2 Sequence Quality | Implemented quality-check step to exclude low-quality genomes [19] | High-quality curated sequences | Varies (includes all submissions) |

Table 2: Performance Metrics in Virus Detection Scenarios

| Performance Metric | RVDB (Post-2025 Refinement) | Pre-Refinement RVDB / Other Databases | Experimental Context |
|---|---|---|---|
| Computational Time | Reduced | Higher | HTS data analysis for broad virus detection in a biologics sample [19] |
| Viral Detection Accuracy | Increased | Lower | Detection of a novel rhabdovirus in Sf9 cell line; low-abundance viral hits are more detectable [19] |
| False Positive Rate | Reduced due to removal of misannotated non-viral sequences and phages [19] | Higher, particularly from cellular and phage sequence contamination [19] | Bioinformatics analysis of HTS data from biological products [19] |
| Proportion of Misannotated Sequences | Systematically reduced via semantic pipeline and automated annotation [19] | Higher (e.g., nr/nt database known to have contamination) [19] | Automated pipeline for distinguishing viral from non-viral sequences [19] |

Experimental Protocols for FAIRness Assessment

The methodology for assessing FAIR adherence and performance in viral databases involves a combination of automated tool-based evaluation and empirical benchmarking.

Protocol 1: Automated FAIR Metric Assessment

This protocol evaluates a database's technical compliance with FAIR principles using specialized software tools.

- Objective: To quantitatively measure the level of FAIR implementation for a given digital object (e.g., a viral database) using community-developed metrics [22].

- Tools: Automated assessment tools such as F-UJI, FAIR-Checker, and FAIR-Enough [22].

- Procedure:

  - Input: The database's persistent identifier (e.g., its DOI or accessible URL) is provided to the assessment tool.

  - Automated Testing: The tool runs a series of scripted tests against a set of predefined metrics for each FAIR principle. For example:

    - Findability (F): Tests for the presence of a globally unique identifier and rich, searchable metadata [23].

    - Accessibility (A): Checks if data is retrievable via a standardized, open protocol and that metadata remains accessible even if data is not [23].

    - Interoperability (I): Evaluates the use of formal, shared languages and vocabularies for knowledge representation [23].

    - Reusability (R): Assesses the presence of a clear usage license, detailed provenance, and adherence to community standards [23].

  - Output: The tool generates a report or scorecard indicating compliance levels for each tested metric.

- Limitations: Different tools may use varying metrics and interpretations, leading to potentially confusing results. This highlights the need for community-standardized metrics [24] [22].
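Each assessment tool reports its own metric set, so a common first step when comparing tools is to collapse each report into per-principle pass rates. The sketch below assumes the report has already been mapped to a hypothetical list of (principle, passed) pairs. It does not parse the native output format of F-UJI, FAIR-Checker, or FAIR-Enough.

```python
from collections import defaultdict


def summarize_fair(results):
    """Per-principle pass rate from (principle, passed) pairs, principle in F/A/I/R."""
    passed = defaultdict(int)
    total = defaultdict(int)
    for principle, ok in results:
        if principle not in ("F", "A", "I", "R"):
            raise ValueError(f"unknown FAIR principle: {principle!r}")
        total[principle] += 1
        passed[principle] += int(bool(ok))
    # Principles with no tested metrics are omitted rather than reported as 0
    return {p: passed[p] / total[p] for p in "FAIR" if total[p]}


# Hypothetical, already-normalized results from two assessment tools
tool_a = [("F", True), ("F", True), ("A", True), ("I", False), ("R", True), ("R", False)]
tool_b = [("F", True), ("F", False), ("A", True), ("I", True), ("R", False)]
for name, results in (("tool A", tool_a), ("tool B", tool_b)):
    print(name, summarize_fair(results))
```

Placing such summaries side by side for two tools makes discrepancies like those noted under Limitations easy to spot. It does not, however, resolve which tool's interpretation of a metric is correct.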

Protocol 2: Empirical Benchmarking for Viral Detection

This protocol assesses the practical performance of a viral database in a real-world HTS analysis scenario.

- Objective: To compare the computational efficiency and detection accuracy of different viral databases when used to analyze HTS data from a complex biological sample [19].

- Sample Preparation: HTS data is generated from a test sample, such as a biologics product or a cell line (e.g., Sf9 insect cell line), which may contain known or novel adventitious viruses [19].

- Bioinformatics Analysis:

  - The HTS reads are queried against the target database (e.g., RVDB, GenBank nr/nt) using a standard alignment tool like BLAST [19].

  - The analysis is performed on identical computational hardware to ensure fair comparison.

- Metrics Collection:

  - Computational Time: Total time required to complete the analysis for each database.

  - Accuracy: Number of true positive viral hits identified versus the number of false positives (e.g., hits to cellular sequences or misannotated entries) [19].

  - Sensitivity: Ability to detect low-abundance viral sequences or novel viruses distantly related to known references [19].
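The accuracy metric above can be computed from standard BLAST+ tabular output (`-outfmt 6`), whose twelve default columns are listed in the code. The sketch keeps the highest-scoring hit per read. Reads whose best hit is an expected viral reference count as true positives, and all other best hits count as false positives. The read and subject identifiers are hypothetical. How the set of expected references is defined (for example, from spike-in controls) is an assumption left to the study design.

```python
import csv
import io

# Default column order of BLAST+ tabular output (-outfmt 6)
OUTFMT6 = ["qseqid", "sseqid", "pident", "length", "mismatch", "gapopen",
           "qstart", "qend", "sstart", "send", "evalue", "bitscore"]


def best_hits(lines):
    """Return {read_id: hit_dict}, keeping the highest-bitscore hit per read."""
    best = {}
    for row in csv.reader(lines, delimiter="\t"):
        if not row:
            continue
        hit = dict(zip(OUTFMT6, row))
        hit["bitscore"] = float(hit["bitscore"])
        current = best.get(hit["qseqid"])
        if current is None or hit["bitscore"] > current["bitscore"]:
            best[hit["qseqid"]] = hit
    return best


def detection_counts(best, expected_subjects):
    """Count best hits to expected viral references (TP) versus anything else (FP)."""
    tp = sum(1 for hit in best.values() if hit["sseqid"] in expected_subjects)
    fp = len(best) - tp
    precision = tp / len(best) if best else 0.0
    return tp, fp, precision


# Hypothetical BLAST output: read1 hits both a viral and a cellular entry
blast_tsv = "\n".join([
    "read1\tvirusA\t98.5\t150\t2\t0\t1\t150\t10\t159\t1e-70\t270",
    "read1\thostX\t90.0\t150\t15\t0\t1\t150\t5\t154\t1e-40\t180",
    "read2\thostX\t99.0\t140\t1\t0\t1\t140\t1\t140\t1e-65\t255",
    "read3\tvirusA\t97.0\t120\t4\t0\t1\t120\t200\t319\t1e-55\t210",
])
best = best_hits(io.StringIO(blast_tsv))
print(detection_counts(best, expected_subjects={"virusA"}))
```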

Essential Research Reagent Solutions

The following tools and databases are critical for conducting rigorous FAIRness assessments and viral detection studies.

Table 3: Research Reagent Solutions for Viral Database Evaluation

| Tool / Database | Type | Primary Function in Evaluation |
|---|---|---|
| F-UJI | Automated FAIR Assessment Tool | Provides programmatic evaluation of a digital resource's compliance against community-accepted FAIR metrics [22]. |
| FAIR-Checker | Automated FAIR Assessment Tool | An alternative tool for automated FAIR principle testing, allowing for comparison of results between different assessment systems [22]. |
| Reference Viral Database (RVDB) | Specialized Viral Sequence Database | A FAIR-enhanced database used as a test subject and a benchmark for evaluating viral detection performance and computational efficiency [19]. |
| NCBI nr/nt Database | Comprehensive Sequence Collection | Serves as a baseline for comparison, highlighting the challenges of non-FAIR data (e.g., high cellular content, redundancy) in HTS analysis [19]. |
| BBTools Suite | Bioinformatics Software Package | Used in database refinement pipelines for tasks like sequence filtering (e.g., filterbyname.sh), directly supporting the creation of more interoperable and reusable data [19]. |
| CD-HIT-EST | Bioinformatics Clustering Tool | Used to reduce sequence redundancy in databases, directly supporting the Findability and Accessibility principles by streamlining the data [19]. |

The adherence to FAIR principles is a decisive factor in the functional utility of viral databases for modern research and drug development. The 2025 refinements to the Reference Viral Database (RVDB), including its Python 3 pipeline transition, rigorous sequence curation, and phage removal, demonstrate a direct correlation between FAIR implementation and enhanced performance metrics such as reduced computational time and increased detection accuracy [19]. While automated FAIR assessment tools provide crucial technical compliance scores, their varying methodologies underscore the need for continued community standardization [24] [22]. For researchers, selecting a viral database that rigorously applies FAIR principles is no longer optional but essential for ensuring efficient, accurate, and reproducible results in HTS-based viral safety and discovery programs.

Leveraging Viral Databases in Research: From Antiviral Discovery to Outbreak Surveillance

The ongoing threat of emerging and re-emerging viral pandemics highlights an urgent need for therapeutic preparedness. In this landscape, broad-spectrum antivirals (BSAs)—compounds capable of inhibiting multiple viruses—and BSA-containing combinations (BCCs) represent crucial strategic resources that can fill the therapeutic void between virus identification and vaccine development [25]. The systematic discovery and analysis of these compounds requires specialized computational resources. Among these, DrugVirus.info 2.0 has emerged as a dedicated integrative portal designed specifically to support antiviral drug repurposing efforts. This guide provides an objective comparison of its functionality, data scope, and analytical capabilities against other research paradigms, supporting a broader thesis on viral database functionality in modern antiviral discovery.

DrugVirus.info 2.0: Core Architecture and Capabilities

DrugVirus.info 2.0 is an integrative data portal that significantly expands upon its initial version, serving as a dedicated repository and analytical toolbox for BSAs and BCCs. Its primary mission is to provide an immediate resource for responding to unpredictable viral outbreaks with substantial public health and economic burdens [25].

The portal's architecture is built around two specialized analytical modules that enhance its utility for research applications:

- The Interactive Screening Analysis Module: Allows users to upload and computationally analyze their own antiviral drug or combination screening data alongside published datasets for comparative validation and contextual interpretation.

- The Structure-Activity Relationship (SAR) Exploration Module: Enables researchers to investigate the relationship between chemical structures of BSAs and their antiviral activity, providing critical insights for rational drug design and optimization [25].

This specialized infrastructure positions DrugVirus.info as a targeted resource for investigators seeking to repurpose existing compounds or develop novel treatments for emerging viral threats.

Essential Research Reagent Solutions

The following table details key reagents and computational tools referenced in broad-spectrum antiviral screening, which form the foundational elements for research in this field.

| Research Reagent / Tool | Function in BSA Research |
|---|---|
| DrugVirus.info 2.0 Portal | Integrative platform for BSA and BCC data exploration and analysis [25] |
| Primary Human Stem Cell-based Models (e.g., Mucociliary Airway Epithelium) | Physiologically relevant ex vivo systems for evaluating antiviral compound efficacy [26] |
| Fluorescent Protein-Expressing Viral Vectors (e.g., RCAd11pGFP, rRVFVDNSs::Katushka) | Enable quantitative high-throughput screening of compound libraries by reporting viral replication levels [26] |
| Merck Mini Library | A set of 80 de-prioritized research compounds provided for repurposing screens across therapeutic areas [26] |
| Deep Learning Models (e.g., LGCNN) | Computational frameworks for rapid drug screening by integrating drug molecular structure and multi-target interaction features [27] |

Comparative Analysis of Antiviral Discovery Platforms

The landscape of platforms and approaches for identifying broad-spectrum antivirals is diverse. The following table provides a systematic comparison of DrugVirus.info 2.0 with other established research paradigms, based on their primary functions, data types, and outputs.

| Platform/Approach | Primary Function | Data Type & Scope | Key Outputs |
|---|---|---|---|
| DrugVirus.info 2.0 | BSA & BCC data repository and interactive analysis [25] | Curated experimental data on compound activity against multiple viruses [25] | SAR insights, combination synergy data, user data context |
| Open Innovation Crowd-Sourcing | Identification of novel drug classes via collective intelligence [26] | Proprietary or shared compound libraries screened in diverse assays | New BSA leads (e.g., Diphenylureas), in vitro and in vivo efficacy data [26] |
| Pharmacovigilance Databases (e.g., EudraVigilance) | Post-market drug safety monitoring [28] | Spontaneous reports of Adverse Drug Reactions (ADRs) from real-world use | Safety profiles, signal detection for specific ADRs (e.g., hepatotoxicity) [28] |
| Deep Learning Models (e.g., LGCNN) | Predictive drug screening for multi-target activity [27] | Chemical structures and drug-target interaction data at local and global levels | Prioritized compound lists with predicted activity against related viruses (e.g., coronaviruses) [27] |

Analysis of Functional Distinctions

The comparative analysis reveals distinct and complementary roles across platforms:

- DrugVirus.info 2.0 excels as a centralized knowledge base and validation tool, integrating curated experimental results to guide hypothesis-driven research. Its value lies in synthesizing pre-clinical efficacy data from structured experiments [25].

- Open Innovation Initiatives, such as the one that identified the diphenylurea compound class, function as discovery engines. These approaches are highly effective for generating novel lead compounds, as demonstrated by the identification of a chemical class with activity against SARS-CoV-2, adenovirus, dengue, herpes, and influenza viruses [26].

- Pharmacovigilance Databases provide a critical safety lens, offering real-world evidence on adverse events that may not be apparent in controlled clinical trials. For example, one analysis of EudraVigilance data highlighted that remdesivir was associated with a high proportion (84%) of serious adverse events and showed significant reporting signals for hepatobiliary, renal, and cardiac disorders [28].

- Deep Learning Models represent the predictive frontier, capable of transcending single-target predictions. Frameworks like LGCNN show potential for rapid emergency response by predicting multi-target interactions and suggesting compounds that may act through "anti-coronavirus universal pathways" [27].
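Reporting signals in pharmacovigilance databases are typically quantified with disproportionality statistics. The method used in the cited analysis is not detailed here. The sketch below therefore shows one common measure, the reporting odds ratio (ROR) with a Wald-type 95% confidence interval, as an illustration rather than the study's method. The report counts are invented.

```python
import math


def reporting_odds_ratio(a, b, c, d, z=1.96):
    """ROR with a Wald-type confidence interval from a 2x2 disproportionality table.

    a: reports with the drug and the event    b: reports with the drug, other events
    c: other drugs with the event             d: other drugs, other events
    """
    if min(a, b, c, d) <= 0:
        raise ValueError("all cells must be > 0 for this simple estimator")
    ror = (a / b) / (c / d)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # standard error of ln(ROR)
    lower = math.exp(math.log(ror) - z * se_log)
    upper = math.exp(math.log(ror) + z * se_log)
    return ror, lower, upper


# Hypothetical counts for one drug-event combination
ror, lower, upper = reporting_odds_ratio(a=40, b=960, c=2000, d=197000)
print(f"ROR = {ror:.2f} (95% CI {lower:.2f}-{upper:.2f})")
```

A frequently used convention treats a lower confidence bound above 1 as a signal, often together with a minimum number of case reports. Thresholds vary between analyses.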

Experimental Protocols for BSA Evaluation

The evaluation of broad-spectrum antiviral candidates relies on a multi-stage workflow incorporating in vitro, ex vivo, and in vivo models to establish efficacy and potential for clinical translation.

Workflow Diagram for BSA Assessment

Protocol Details

Primary Antiviral Screening

This initial high-throughput screen identifies candidate compounds. A compound library is serially diluted in DMSO to create a concentration range (e.g., a nine-point, two-fold dilution series from 100 μM down to approximately 0.4 μM). Cells (e.g., A549) are seeded in 96-well plates and subsequently infected with replication-competent viral vectors expressing fluorescent markers (e.g., GFP for adenovirus, Katushka for RVFV) in the presence of the compounds. Viral replication is quantified 16-24 hours post-infection by measuring viral-specific fluorescence. Benzavir-2, a known preclinical compound with activity against both HAdV and RVFV, serves as a positive control [26].
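The dilution and readout arithmetic can be sketched as follows. A series from 100 μM to about 0.4 μM comprises nine concentrations separated by eight two-fold dilutions (100 / 2^8 ≈ 0.39 μM). The normalization to percent inhibition assumes infected-untreated and uninfected wells as plate controls. This is a common design but an assumption here, and the fluorescence values are hypothetical.

```python
def twofold_series(top_um=100.0, points=9):
    """Concentrations (uM) of a two-fold serial dilution starting at top_um."""
    return [top_um / 2 ** i for i in range(points)]


def percent_inhibition(signal, infected_ctrl, uninfected_ctrl):
    """Normalize reporter fluorescence to % inhibition of viral replication.

    0% = infected, untreated control; 100% = uninfected background.
    """
    return 100.0 * (infected_ctrl - signal) / (infected_ctrl - uninfected_ctrl)


concs = twofold_series()
print([round(c, 2) for c in concs])
print(percent_inhibition(signal=5200, infected_ctrl=10000, uninfected_ctrl=400))
```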

Plaque Reduction Assay

This protocol confirms and quantifies antiviral activity against specific viruses of interest. For SARS-CoV-2, VeroE6 cells are seeded in 12-well plates and infected with a defined number of plaque-forming units (e.g., 250 pfu/well) together with serial dilutions of the candidate compound. After an incubation period with a semi-solid overlay medium (e.g., containing carboxymethyl cellulose to restrict viral spread), the cells are fixed and stained (e.g., with crystal violet). The number of viral plaques is counted to determine the compound's concentration-dependent inhibitory effect [26].
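Plaque counts are usually converted to percent reduction relative to untreated infected wells and summarized as a 50% inhibitory concentration (IC50). The sketch below estimates IC50 by log-linear interpolation between the two concentrations that bracket 50% reduction. This simplifies the curve fitting that studies often use, such as four-parameter logistic regression. The concentrations and plaque counts are hypothetical.

```python
import math


def percent_reduction(counts, control_mean):
    """Plaque reduction (%) relative to the mean count in untreated infected wells."""
    return [100.0 * (1.0 - c / control_mean) for c in counts]


def ic50_log_interpolation(concs, reductions):
    """Concentration giving 50% reduction, interpolated on a log10 concentration scale.

    Assumes concentrations are ascending and reduction increases with dose;
    returns None if 50% reduction is not bracketed by the tested range.
    """
    pairs = list(zip(concs, reductions))
    for (c_lo, r_lo), (c_hi, r_hi) in zip(pairs, pairs[1:]):
        if r_lo < 50.0 <= r_hi:
            frac = (50.0 - r_lo) / (r_hi - r_lo)
            log_c = math.log10(c_lo) + frac * (math.log10(c_hi) - math.log10(c_lo))
            return 10 ** log_c
    return None


concs_um = [0.5, 1.0, 2.0, 4.0, 8.0]
plaques = [190, 160, 120, 60, 10]  # hypothetical mean plaque counts per well
reductions = percent_reduction(plaques, control_mean=200.0)
print(f"IC50 ~ {ic50_log_interpolation(concs_um, reductions):.2f} uM")
```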

Advanced Ex Vivo and In Vivo Validation

Promising compounds progress to more physiologically relevant models. For SARS-CoV-2, this includes a primary human stem cell-based mucociliary airway epithelium model, which closely mimics the human respiratory tract. Finally, efficacy is confirmed in vivo, for example in a murine SARS-CoV-2 infection model, providing critical data on the compound's performance in a living organism before clinical development [26].

Mechanisms of Broad-Spectrum Antiviral Activity

Broad-spectrum antivirals achieve their effect by targeting either common viral components or host cellular factors that multiple viruses depend on for replication. The strategic rationale for these two approaches is summarized below.

Direct-Acting Antiviral (DAA) Strategies

Direct-acting antivirals typically provide a narrower spectrum of activity but are a cornerstone of antiviral therapy. They target viral elements such as:

- Viral Polymerases: The catalytic units of RNA-dependent RNA polymerases (RdRp) are structurally conserved across many viral families, making them prime targets. Nucleoside/Nucleotide analogs like remdesivir and ribavirin incorporate into the growing RNA chain, causing premature termination or lethal mutagenesis [29].

- Viral Proteases: Viruses like SARS-CoV-2 and HIV require proteases to process their polyproteins (e.g., the SARS-CoV-2 main protease, Mpro, also known as 3CLpro, and HIV protease). Inhibitors such as nirmatrelvir, which targets SARS-CoV-2 Mpro, are designed to specifically block these enzymes [28] [29].

Host-Targeting Antiviral (HTA) Strategies

Host-targeting approaches aim for a broader spectrum by interfering with cellular pathways hijacked by multiple, often unrelated, viruses. This can include:

- Cellular Enzymes and Pathways: Targeting host factors like cytochrome P450 enzymes or the oxysterol-binding protein (OSBP) pathway can disrupt the replication cycle of multiple viruses simultaneously [30] [29].

- Viral Entry Mechanisms: Compounds can block viral entry by interacting with cellular receptors or co-factors required by multiple viruses, such as the ACE2 receptor used by SARS-CoV-2 [29].

The comparative analysis presented in this guide underscores a critical paradigm: no single platform or strategy fully addresses the complex challenge of broad-spectrum antiviral development. Each major approach offers distinct advantages.

DrugVirus.info 2.0 establishes its unique value not as a primary discovery tool, but as an integrative hub for validation and analysis. Its strength lies in synthesizing experimental data on BSA and BCC efficacy, enabling SAR studies, and allowing researchers to contextualize their own findings within the broader research landscape [25]. In contrast, open innovation models excel at de novo lead generation, as demonstrated by the identification of the diphenylurea class [26], while pharmacovigilance databases provide the indispensable real-world safety profiles that these other platforms lack [28].

For pandemic preparedness, a synergistic strategy is paramount. The future of antiviral drug repurposing lies in leveraging the predictive power of AI models for rapid candidate identification [27], the validated efficacy data from portals like DrugVirus.info for triaging leads, and the comprehensive safety data from pharmacovigilance to de-risk clinical translation. Together, these interconnected resources form a powerful defense network, enhancing our capability to respond rapidly and effectively to future viral threats.

The field of virology is undergoing a profound transformation, driven by the integration of artificial intelligence (AI) and machine learning (ML) with large-scale biological databases. This synergy is creating powerful predictive models that accelerate our understanding of viral infectivity and pave the way for more efficient drug discovery pipelines. AI's ability to analyze complex, high-dimensional data from diverse sources—including genomic sequences, protein structures, and clinical records—is enabling researchers to uncover patterns and relationships that were previously inaccessible through traditional methods. The emergence of comprehensive, AI-powered databases is setting the stage for a new era in viral research and therapeutic development, moving from reactive approaches to proactive, predictive viral management [16].

This guide provides an objective comparison of the current landscape of AI-driven databases and predictive models, focusing on their functionality, performance, and application in infectious disease research. We examine the core technologies powering these platforms, evaluate their predictive capabilities through experimental data, and detail the methodologies required to validate their performance. For researchers, scientists, and drug development professionals, this analysis offers a practical framework for selecting and implementing these powerful tools in the race against evolving viral threats.

Comparative Analysis of AI-Enhanced Viral Databases and Predictive Platforms

The ecosystem of databases and analytical platforms available to virologists and drug discovery researchers is rapidly expanding. The table below provides a structured, objective comparison of key resources, highlighting their primary content, AI/ML integration, and research applications.

Table 1: Comparative Analysis of Viral and Biomedical Databases with AI/ML Applications

| Database/Platform Name | Primary Content & Data Type | AI/ML Integration & Specialized Features | Key Applications in Viral/Infectious Disease Research |
|---|---|---|---|
| Viro3D [16] | Structural models for 85,000 proteins from 4,400 human and animal viruses. | AI-powered structural prediction and analysis; reveals evolutionary origins and relationships. | Accelerated computational design of antiviral drugs and vaccines; investigation of viral evolution (e.g., SARS-CoV-2 origins). |
| NAR Database Issue 2025 [31] | 185 papers, including 73 on new and 101 on updated biological databases (e.g., EXPRESSO, BFVD, ClinVar, PubChem, DrugMAP). | Curated collection includes many AI-ready resources; features structural predictions (e.g., ASpdb, BFVD) and omics data. | Foundational resource for finding specialized databases for pathogen detection, genomic variation, drug targets, and multi-omics analysis. |
| BFVD (Big Fantastic Virus Database) [31] | AlphaFold-predicted structures of viral proteins. | Leverages deep learning (AlphaFold) for high-quality protein structure prediction. | Provides structural insights for viral proteins, aiding in epitope mapping and drug target identification. |
| PubChem BioAssay [32] | Large-scale bioactivity data from High-Throughput Screening (HTS); chemical compounds against specific biological targets. | Primary data source for training ML/DL models to predict anti-pathogen activity of chemical compounds. | AI-based screening for anti-viral and anti-pathogen compounds; dataset for building predictive models in drug discovery. |
| STRING / KEGG [31] | Metabolic and signaling pathways; protein-protein interaction networks. | Network analysis and pathway enrichment; integrates with ML models for systems biology. | Understanding virus-host interactions; identifying host-directed therapy targets; analyzing infection impact on cellular processes. |

Performance Benchmarking: AI Models in Drug Discovery

A critical challenge in AI-driven drug discovery is managing the inherent imbalance in bioassay datasets, where inactive compounds vastly outnumber active ones. A recent 2025 study provides a quantitative comparison of various AI models and data-handling techniques, offering crucial performance benchmarks for researchers [32].

Experimental Protocol for Model Evaluation

The following methodology was used to generate the comparative performance data:

- Dataset Curation: Models were trained on four highly imbalanced PubChem bioassay datasets targeting HIV, Malaria, Human African Trypanosomiasis, and COVID-19. The Imbalance Ratio (IR), defined as the ratio of active to inactive molecules, ranged from 1:82 to 1:104 [32].

- Model Selection: The study evaluated five classic Machine Learning (ML) algorithms—Random Forest (RF), Multi-Layer Perceptron (MLP), K-Nearest Neighbors (KNN), eXtreme Gradient Boosting (XGBoost), and Naive Bayes (NB)—and six Deep Learning (DL) models, including Graph Convolution Networks (GCN) and transformer-based models like ChemBERTa [32].

- Resampling Techniques: To address data imbalance, several data-level techniques were applied and compared:

  - ROS (Random OverSampling): Randomly duplicating instances from the minority (active) class.

  - RUS (Random UnderSampling): Randomly removing instances from the majority (inactive) class.

  - SMOTE & ADASYN: Generating synthetic samples of the minority class.

  - K-Ratio RUS (Novel): A systematic undersampling approach to create specific, optimal IRs (1:50, 1:25, 1:10) rather than perfect balance (1:1) [32].

- Performance Metrics: Models were evaluated using multiple metrics, including ROC-AUC, Balanced Accuracy, Matthews Correlation Coefficient (MCC), Precision, Recall, and F1-score, with a focus on MCC and F1-score for a robust assessment of imbalanced classification performance [32].
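The K-Ratio RUS idea can be illustrated with a short Python sketch. This is not the study's code: the function name `k_ratio_undersample` is hypothetical, and it assumes binary labels where 1 marks an active compound and 0 an inactive one. It keeps every active compound and randomly retains at most `k` inactives per active, so a 1:100 dataset resampled with `k=10` ends up at 1:10.

```python
import random
from typing import List, Sequence, Tuple


def k_ratio_undersample(
    X: Sequence, y: Sequence[int], k: int, seed: int = 0
) -> Tuple[List, List[int]]:
    """Randomly undersample inactives (label 0) to roughly a 1:k active:inactive ratio.

    Hypothetical helper for illustration; assumes labels are 1 (active) or 0 (inactive).
    """
    rng = random.Random(seed)
    actives = [i for i, label in enumerate(y) if label == 1]
    inactives = [i for i, label in enumerate(y) if label == 0]
    # Keep at most k inactives per active, but never more than actually exist.
    n_keep = min(len(inactives), k * len(actives))
    kept_inactives = rng.sample(inactives, n_keep)
    # Sort indices so the retained records keep their original order.
    keep = sorted(actives + kept_inactives)
    return [X[i] for i in keep], [y[i] for i in keep]


# Hypothetical example: 10 actives and 1,000 inactives (IR 1:100) resampled to 1:10.
X = [f"compound_{i}" for i in range(1010)]
y = [1] * 10 + [0] * 1000
X_res, y_res = k_ratio_undersample(X, y, k=10)
print(sum(y_res), len(y_res) - sum(y_res))  # prints: 10 100
```

As a general rule, resampling of this kind is applied only to the training split; the held-out test set keeps its original imbalance so that MCC and F1-score reflect realistic screening conditions. In scikit-learn-style workflows, the `imbalanced-learn` package's `RandomUnderSampler` with a float `sampling_strategy` (for example `0.1` for 1:10 in the binary case) performs comparable random undersampling.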

Table 2: Performance Benchmark of AI Models and Data Resampling Techniques on Imbalanced Drug Discovery Datasets

| Model / Data Treatment | MCC (qualitative range across datasets) | Relative Inference Speed | Key Strengths | Key Limitations |
|---|---|---|---|---|
| Random Forest (RF) | Medium to High (Varies with resampling) | Fast | High interpretability; robust to noise. | Performance highly dependent on dataset resampling. |
| Multi-Layer Perceptron (MLP) | Medium (Varies with resampling) | Medium | Good for complex, non-linear relationships. | Requires extensive hyperparameter tuning. |
| Graph Neural Networks (GCN, GAT) | Medium to High | Slow (Pre-training) / Fast (Inference) | Directly learns from molecular graph structure. | Computationally intensive; requires significant data. |
| Transformer Models (ChemBERTa, MolFormer) | Medium to High | Slow (Pre-training) / Medium (Inference) | State-of-the-art on many benchmarks; pre-trained on vast chemical libraries. | "Black-box" nature; high computational resource demand. |
| Original Imbalanced Data | Very Low (Near or below zero) | N/A | Baseline performance. | Severe bias towards predicting inactive compounds. |
| Random OverSampling (ROS) | Low to Medium | N/A | Improves recall of active compounds. | Can lead to overfitting; significantly reduces precision. |
| Random UnderSampling (RUS) | Medium to High | N/A | Consistently enhanced MCC & F1-score across datasets. | Potential loss of information from majority class. |
| K-Ratio RUS (1:10) | Highest | N/A | Optimal balance between true positive and false positive rates. | Requires tuning to find optimal imbalance ratio. |

Key Findings from Experimental Data

The comparative analysis revealed several critical insights for building effective predictive models:

- Addressing Imbalance is Crucial: Training models on the original, highly imbalanced data resulted in poor performance (MCC near or below zero), confirming that standard algorithms are biased toward the majority (inactive) class [32].

- Systematic Undersampling Outperforms: The novel K-Ratio RUS approach, particularly with a moderate imbalance ratio of 1:10, achieved the best overall performance. It provided an optimal balance, significantly enhancing the models' ability to identify active compounds (recall) while maintaining a reasonable level of precision, leading to superior MCC and F1-scores [32].

- Model Choice is Context-Dependent: While advanced models like Transformers and Graph Networks showed high performance, simpler models like Random Forest performed robustly when combined with proper data resampling techniques, offering a good balance of performance, speed, and interpretability [32].
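Why focus on MCC rather than accuracy? The minimal sketch below uses hypothetical confusion-matrix counts for a 1:100 test set (the numbers are illustrative, not from [32]). A model that labels every compound inactive scores about 99% accuracy yet has an MCC of 0, consistent with the near-zero MCC reported for models trained on the original imbalanced data, while a model that actually recovers actives has slightly lower accuracy but a much higher MCC.

```python
import math


def mcc(tp: int, tn: int, fp: int, fn: int) -> float:
    """Matthews Correlation Coefficient; returns 0.0 when the denominator is zero."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0


def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    return (tp + tn) / (tp + tn + fp + fn)


# Hypothetical test set: 100 actives, 10,000 inactives (IR 1:100).
# Model A predicts "inactive" for every compound.
a = dict(tp=0, tn=10_000, fp=0, fn=100)
# Model B finds 60 of the 100 actives at the cost of 90 false positives.
b = dict(tp=60, tn=9_910, fp=90, fn=40)

for name, cm in [("A", a), ("B", b)]:
    print(name, round(accuracy(**cm), 3), round(mcc(**cm), 3))
# A 0.99 0.0
# B 0.987 0.484
```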

Workflow and Signaling Pathways in AI-Driven Viral Analysis

The application of AI in virology follows a structured pipeline, from data acquisition to clinical prediction, integrating metagenomic viral discovery with subsequent AI-driven analysis of infectivity and drug activity.

AI-Driven Viral Discovery and Analysis Pipeline

The workflow integrates key technological and methodological advances:

- Unbiased Discovery: Shotgun metagenomics allows for the identification of novel viruses without prior knowledge, moving beyond the limitations of culture-based methods and targeted PCR [33].

- AI-Powered Annotation: Tools like VirSorter2 and DeepVirFinder use machine learning to identify viral sequences in complex metagenomic data. Subsequent structural annotation with AI-powered databases like Viro3D and BFVD provides critical functional insights, such as identifying Auxiliary Metabolic Genes (AMGs) that viruses use to reprogram host metabolism [33].

- Predictive Modeling: Extracted features feed into ML models trained to predict key outcomes like infectivity and drug activity. The integration of systematic data resampling techniques (e.g., K-Ratio RUS) is a critical step to ensure model accuracy and generalizability [32].
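The source does not specify which features the pipeline extracts. As an assumed illustration only, one widely used way to turn nucleotide sequences into fixed-length numeric vectors for ML models is k-mer frequency counting, sketched below; the function name `kmer_frequencies` is hypothetical.

```python
from itertools import product
from typing import List


def kmer_frequencies(seq: str, k: int = 3) -> List[float]:
    """Normalized counts of all 4**k DNA k-mers, in lexicographic (ACGT) order.

    Hypothetical featurizer for illustration; k-mers containing non-ACGT
    characters such as N are skipped.
    """
    index = {"".join(p): i for i, p in enumerate(product("ACGT", repeat=k))}
    counts = [0] * len(index)
    seq = seq.upper()
    for i in range(len(seq) - k + 1):
        j = index.get(seq[i : i + k])
        if j is not None:
            counts[j] += 1
    total = sum(counts)
    # Normalizing by the number of valid k-mers makes contigs of different lengths comparable.
    return [c / total for c in counts] if total else [0.0] * len(counts)


features = kmer_frequencies("ACGTACGTNN", k=2)
print(len(features))  # prints: 16
```

Each contig becomes a vector of the same length (4**k), which is the kind of input a classifier such as Random Forest expects.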

Building and applying predictive AI models for infectivity and drug discovery requires a suite of computational tools, data resources, and analytical platforms.

Table 3: Essential Research Reagents and Computational Tools for AI-Driven Viral Research

| Tool / Resource Name | Type | Primary Function in Research | Key Features / Notes |
|---|---|---|---|
| VirSorter2 & DeepVirFinder [33] | Bioinformatics Software (AI Tool) | Identification of viral sequences from complex metagenomic assemblies. | Uses machine learning to detect novel viruses; critical for expanding the known virosphere. |
| Viro3D [16] | Specialized Database | Provides AI-predicted structural models for thousands of viral proteins. | Enables structural analysis and drug target identification without wet-lab structure determination. |
| PubChem Bioassay [32] | Public Chemical/Bioactivity Database | Source of large-scale, imbalanced datasets for training AI models to predict anti-pathogen activity. | Essential for benchmarking and training predictive models in drug discovery. |
| Random Forest (RF) & XGBoost [32] | Machine Learning Algorithm | Classic, interpretable ML models for building robust predictors from bioassay and omics data. | Often achieve performance comparable to more complex DL models, especially with proper data resampling. |
| K-Ratio Random Undersampling (K-RUS) [32] | Data Pre-processing Methodology | Optimizes imbalance ratio in training data (e.g., to 1:10) to significantly boost model performance. | A simple yet highly effective technique to mitigate bias in drug discovery datasets. |
| Graph Neural Networks (GCN, GAT) [32] | Deep Learning Algorithm | Learns directly from the molecular graph structure of compounds for activity prediction. | Captures rich structural information; well-suited for molecular property prediction. |
| IMG/VR, RefSeq, RVDB [33] | Curated Reference Database | Provides reference sequences for taxonomic classification and functional annotation of viral reads. | Quality and breadth of databases directly impact the reduction of "viral dark matter." |
| Illumina, Oxford Nanopore [33] | Sequencing Technology | Generates the primary DNA/RNA sequence data from environmental or clinical samples. | Short-read (Illumina) and long-read (Nanopore) technologies are often used complementarily. |

Viral taxonomy, the science of classifying viruses into a standardized hierarchical system, is fundamental to virology research, outbreak tracking, and drug development. Unlike cellular organisms, viruses lack universal marker genes, making their classification inherently complex and reliant on specialized computational tools. The International Committee on Taxonomy of Viruses (ICTV) serves as the global authority that establishes and maintains the official framework for virus taxonomy [34]. The rapid expansion of sequenced viral genomes underscores the critical need for automated taxonomic assignment pipelines that can keep pace with new discoveries and integrate seamlessly with the evolving ICTV framework. This comparison guide objectively evaluates the performance of one such modern tool, the Viral Taxonomic Assignment Pipeline (VITAP), against other established methods, focusing on their integration with ICTV standards and their utility for researchers and drug development professionals.

The ICTV Framework and Database Integration

The ICTV provides the foundational rules and nomenclature for virus classification through the International Code of Virus Classification and Nomenclature (ICVCN) [34]. The committee's work ensures stability and avoids confusion in taxon naming, which is crucial for clear scientific communication. The official taxonomy is curated in the Master Species List (MSL), which is accessible online and regularly updated [35].

A significant recent development is the adoption of binomial nomenclature for virus species names. As of April 2025, NCBI Taxonomy has begun implementing these changes, introducing over 7,000 new binomial species names to improve consistency and precision [36]. For example, what was previously known as "Human immunodeficiency virus 1" is now classified as species "Lentivirus humimdef1" within the genus "Lentivirus" [36]. This shift has profound implications for databases and analytical tools, which must update their reference sets to remain current. Effective taxonomic pipelines must therefore be designed to automatically synchronize with these official ICTV releases to provide accurate and up-to-date assignments.
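Keeping local annotations in sync with such renamings amounts to a lookup from legacy names to current binomials. The Python sketch below is a hypothetical illustration: the table `LEGACY_TO_BINOMIAL` and the function `update_species_labels` are invented for this example, a real lookup would be generated from the current ICTV release, and only the HIV-1 entry comes from the text above. Unmatched names are reported rather than silently kept, so they can be reviewed by hand.

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical excerpt of a legacy-to-binomial lookup table. A real table would be
# generated from the current ICTV release; only the HIV-1 entry comes from the text.
LEGACY_TO_BINOMIAL: Dict[str, str] = {
    "Human immunodeficiency virus 1": "Lentivirus humimdef1",
}


def update_species_labels(
    labels: Iterable[str], mapping: Dict[str, str]
) -> Tuple[List[str], List[str]]:
    """Swap legacy species names for current binomials.

    Returns (updated_labels, unmapped); names that are neither a known legacy
    name nor a current binomial are passed through unchanged and reported.
    """
    current = set(mapping.values())
    updated: List[str] = []
    unmapped: List[str] = []
    for name in labels:
        key = name.strip()
        if key in mapping:
            updated.append(mapping[key])
        else:
            updated.append(key)
            if key not in current:
                unmapped.append(key)
    return updated, unmapped


labels = ["Human immunodeficiency virus 1", "Lentivirus humimdef1", "Unknown virus X"]
updated, unmapped = update_species_labels(labels, LEGACY_TO_BINOMIAL)
print(updated, unmapped)
```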

VITAP (Viral Taxonomic Assignment Pipeline) is a recently developed tool designed to address the challenges of classifying both DNA and RNA viruses from metagenomic and metatranscriptomic data. As published in Nature Communications, VITAP integrates alignment-based techniques with graph theory to achieve high-precision classification and provides a confidence level for each taxonomic assignment [9] [37]. A key feature of VITAP is its ability to automatically update its reference database in sync with the latest ICTV releases, ensuring researchers have access to the most current taxonomy [9]. It is capable of classifying viral sequences as short as 1,000 base pairs up to the genus level, making it suitable for working with fragmented data from metagenomic studies [37].

Comparative Performance Analysis

Benchmarking studies are essential to evaluate the real-world performance of bioinformatic tools. VITAP's developers conducted a rigorous tenfold cross-validation comparing its performance against vConTACT2, another established pipeline, using viral reference genomic sequences from the VMR-MSL [9].
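Tenfold cross-validation repeatedly holds out one tenth of the reference sequences, classifies them against the remaining nine tenths, and averages the metrics over the ten rounds. The sketch below shows only the splitting step. The source does not describe how the VMR-MSL sequences were partitioned (for example, whether folds were stratified by taxon), so a plain random split is assumed, and the function name `k_fold_splits` is hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def k_fold_splits(items: Sequence, k: int = 10, seed: int = 0) -> List[Tuple[List, List]]:
    """Return k (reference, held_out) pairs from one random shuffle.

    Every item appears in exactly one held-out fold; fold sizes differ by at most one.
    """
    order = list(range(len(items)))
    random.Random(seed).shuffle(order)
    folds = [order[i::k] for i in range(k)]
    splits = []
    for fold in folds:
        held_out = set(fold)
        reference = [items[j] for j in order if j not in held_out]
        splits.append((reference, [items[j] for j in fold]))
    return splits


genomes = [f"genome_{i}" for i in range(25)]  # hypothetical reference genome IDs
splits = k_fold_splits(genomes, k=10)
print([len(test) for _, test in splits])  # prints: [3, 3, 3, 3, 3, 2, 2, 2, 2, 2]
```

In each round, the tool's reference database would be rebuilt from `reference` only, so the held-out genomes are genuinely unseen during classification.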

Key Performance Metrics

The following table summarizes the core performance metrics for VITAP and vConTACT2 at the family and genus levels.

Table 1: Overall Performance Metrics Comparison

| Metric | Taxonomic Level | VITAP | vConTACT2 |
|---|---|---|---|
| Average Accuracy | Family & Genus | >0.9 [9] | >0.9 [9] |
| Average Precision | Family & Genus | >0.9 [9] | >0.9 [9] |
| Average Recall | Family & Genus | >0.9 [9] | >0.9 [9] |
| Average Annotation Rate (1-kb sequences) | Family | 0.53 higher [9] | Baseline |
| Average Annotation Rate (1-kb sequences) | Genus | 0.56 higher [9] | Baseline |
| Average Annotation Rate (30-kb sequences) | Family | 0.43 higher [9] | Baseline |
| Average Annotation Rate (30-kb sequences) | Genus | 0.38 higher [9] | Baseline |

The data show that while both tools achieve high and comparable accuracy, precision, and recall, VITAP's principal advantage is its substantially higher annotation rate (0.38 to 0.56 higher, depending on sequence length and taxonomic level). This means VITAP assigns a taxonomic label to a larger proportion of input sequences, which is critical for maximizing data utilization in virome studies.
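Annotation rate and precision measure different things. As a hedged sketch, assuming annotation rate is the fraction of input sequences that receive any label and precision is the fraction of assigned labels that are correct (the exact definitions used in [9] may differ), the snippet below shows how a tool can reach perfect precision while leaving most sequences unclassified. The function name and the benchmark data are hypothetical.

```python
from typing import Dict, Optional


def annotation_metrics(
    predicted: Dict[str, Optional[str]], truth: Dict[str, str]
) -> Dict[str, float]:
    """Annotation rate and precision over the sequences listed in `truth`.

    `predicted` maps a sequence ID to an assigned genus, or to None (or omits the ID)
    when the tool leaves that sequence unclassified.
    """
    assigned = {s: predicted[s] for s in truth if predicted.get(s) is not None}
    correct = sum(1 for s, genus in assigned.items() if genus == truth[s])
    return {
        "annotation_rate": len(assigned) / len(truth) if truth else 0.0,
        "precision": correct / len(assigned) if assigned else 0.0,
    }


# Hypothetical benchmark of 10 sequences, all truly in genus "G1".
truth = {f"seq{i}": "G1" for i in range(10)}
tool_a = {**{f"seq{i}": "G1" for i in range(8)}, "seq8": "G2", "seq9": None}
tool_b = {"seq0": "G1", "seq1": "G1", "seq2": "G1"}  # labels only 3 sequences
print(annotation_metrics(tool_a, truth))  # annotation rate 0.9, precision 8/9
print(annotation_metrics(tool_b, truth))  # annotation rate 0.3, precision 1.0
```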

Performance Across Viral Phyla

A tool's generalizability is tested by its performance across diverse viral groups. The annotation rates of VITAP and vConTACT2 were compared across several DNA and RNA viral phyla for both short (1-kb) and nearly complete (30-kb) genomes.

Table 2: Genus-Level Annotation Rate by Viral Phylum for 1-kb Sequences

| Viral Phylum (Example) | VITAP Annotation Rate | vConTACT2 Annotation Rate | Difference |
|---|---|---|---|
| Cressdnaviricota (ssDNA viruses) | Significantly Higher [9] | Baseline | +0.94 [9] |
| Phixviricota (Microviridae phages) | Significantly Higher [9] | Baseline | +0.87 [9] |
| Cossaviricota (Papillomaviridae) | Higher [9] | Baseline | +0.13 [9] |

Table 3: Genus-Level Annotation Rate by Viral Phylum for 30-kb Sequences

| Viral Phylum (Example) | VITAP Annotation Rate | vConTACT2 Annotation Rate | Difference |
|---|---|---|---|
| Kitrinoviricota (Alphavirus, Hepacivirus) | Significantly Higher [9] | Baseline | +0.86 [9] |
| Artverviricota (Retroviruses) | Higher [9] | Baseline | +0.06 [9] |
| Preplasmiviricota (e.g., adenoviruses) | Lower [9] | Baseline | -0.05 [9] |

The results demonstrate that VITAP achieves higher annotation rates for all RNA viral phyla and most DNA viral phyla, particularly with short sequences [9]. Its performance is robust across a wide spectrum of viruses, not just the prokaryotic dsDNA viruses that some older tools are optimized for. vConTACT2, while achieving a very high F1 score, does so at the cost of a much lower annotation rate, potentially leaving more data unclassified [9].

Experimental Protocols and Methodologies

To ensure the reproducibility of the benchmark results, this section outlines the key experimental protocols cited in the performance analysis.

Benchmarking Experiment: Tenfold Cross-Validation